Forget Funnels: Your Next GTM Engine Is Prompt-Led

How to cross the GenAI Divide and treat your prompts as strategic IP assets

Dear GTM Strategist,

Over the last year, I’ve seen a strange paradox inside GTM teams.

Everyone is “using AI” – but few are actually winning with it.

We copy prompts. We experiment. We wire tools together.

And still, 95% of AI pilots never make it to production. The GenAI Divide is getting wider – fast.

That’s why I invited Matteo Tittarelli, GTM consultant at Genesys Growth and Co-founder of GTM Engineer School, to write this week’s guest post.

In this post, he breaks down how top GTM operators move from prompt chaos to Prompt-Led Engines – with real-world examples from teams like GrowthX and Gorgias, and a framework you can actually apply today.

You will learn:

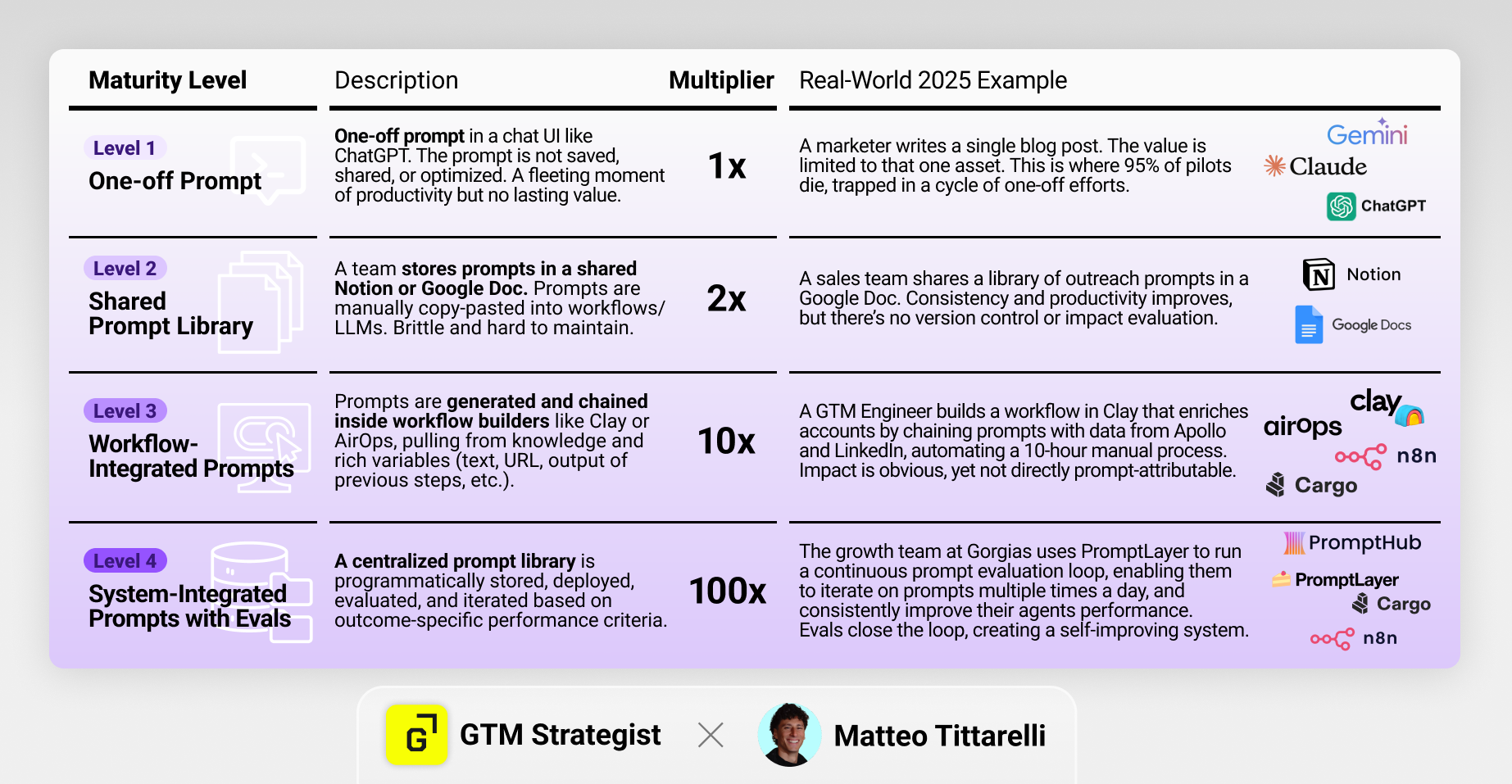

The 4 levels of prompt maturity

The P.R.O.M.P.T. framework for turning ideas into reusable assets

How to scale an agency or automate enterprise customer support with the Prompt-Led Engine

If you feel you’re sitting on AI potential you’re not fully capturing yet, this one is for you.

If you are new to all this outbound, GTM engineering and prompting craft that looks a bit like a programming language or rocket science - worry not :)

Don’t be absorbed by FOMO, we all have to start somewhere - and one of the best, most accessible ways to get your outbound GTM motion up and running quickly and affordably is Hunter.

Hunter was the first “find emails” software I ever used, back in 2017 - and now, in 2025, they’ve evolved to add intent data, email verification, and email sequences to leads, all in one platform. Today, I am proud that they are supporting the GTM Strategist mission.

Here is a 5-minute demo of how I found FREE contacts by prompting Hunter.

“Find me companies that are just like Clay and recently got funding” - that’s it. I could identify three amazing opportunities immediately.

The easiest way to win new business? Do your job really well, produce a strong case study, and pitch it to lookalike companies who need the same results that you already know how to deliver.

Don’t wait for decision makers to magically find your website.

Take control and reach out proactively.

Send a meaningful DM on LinkedIn or email and kickstart the conversation NOW - while they’re finalising Q1 plans. Get verified contacts with Hunter and tell them how you can help.

(free version available, start package $49/month)

Now, let’s hear it from Matteo. 👇

The Gen AI Divide

The age of AI is no longer emerging — it is dominantly. Upon. Us.

As of late 2025, a staggering 88% of organizations reported using AI in at least one business function. In marketing and sales, this has led to widespread tactical use of LLMs for everything from summarizing meetings with Granola to generating ad copy with Claude, to full-blown workflows of intent-based outbound across Clay and Smartlead, or AI content generation with n8n, AirOps, and Webflow.

But here’s the paradox nobody is talking about. While everyone is using AI (or trying to), very few are really succeeding with it.

We’re in a state of “prompt anarchy”: a tactical free-for-all of free prompt templates, libraries, and masterclasses (even we have some!) that seemingly creates value, but can also be so overwhelming that it causes analysis paralysis.

This AI and prompting craze is the reason why we’re witnessing a massive “GenAI Divide”. On one hand, critics say it’s spreading brain rot, making content marketers and operators lazier. On the other hand, it’s skewing the real GTM alpha toward the teams with the largest, most structured data sets, and the ambition to mine them.

The proof is in the numbers. A recent MIT report found that a shocking 95% of generative AI pilots at enterprise companies are failing to make it to production. This represents a massive waste of resources and a catastrophic failure to capture AI value.

This is compounded by two other critical business risks:

The infrastructure gap: While 77% of executives feel urgent pressure to adopt AI, only 25% believe their IT infrastructure is actually ready for enterprise-wide scaling. It’s like trying to run a Tesla on a lawnmower engine. This shows not just that we’re still early, but that to see real innovation in AI usage, we’re better off turning to the smaller, more nimble players that are building AI workflow software. That’s where the true AI alpha lies. More on this below.

The prompt management gap: Alex Halliday, founder of AirOps, mentioned: “Your prompts are your IP — keep them optimized and manageable like an engineer would”. But instead, most GTM teams treat prompts like disposable scripts instead of strategic assets. This leads to inconsistent quality and zero reusability — a far cry from the industry benchmark of 40-60% prompt reuse that high-performing teams achieve.

So, in an environment where 95% of initiatives fail, how can GTM leaders cross the GenAI Divide and join the top 5% mining the real GTM alpha? How do you transform scattered, failing pilots into a cohesive, strategic, and scalable growth engine that drives measurable business results?

There’s no one-size-fits-all solution. BUT, we believe it starts with managing your prompts better — like they were actual IP to nurture, polish, and constantly refine.

And doing so in a systematic fashion, with what we call a Prompt-Led Engine (PLE).

This requires treating prompts with the same rigor as other critical GTM assets like ad campaigns or sales playbooks. It’s what we see top operators doing at the GTM Engineer School, where students from the likes of Ahrefs, Google, Slack, and more learn how to build automated systems across AI GTM tools like Clay, n8n, Cargo, Octave, and AirOps to automate 10-hour manual processes and reduce manual effort by 80%.

In this post, we’ll walk you through our systematic approach to designing, testing, managing, and deploying prompts as core components of your automated GTM strategy.

And we’ll give you examples of who’s doing it very well, so you know who to look up to for what good looks like!

Let’s dive in.

1. The 100x value multiplier of a Prompt-Led Engine

The journey from prompt anarchy to a Prompt-Led Engine isn’t just about getting organized — it’s about unlocking exponential value.

It’s a strategic shift from treating prompts as disposable commands to architecting them as durable, scalable assets.

As Brendan Short of The Signal notes, the future of GTM tech is about selling automated labor, not just software. According to Brendan,

“The GTM company that will break out beyond a $1B valuation (and into $100B territory) will be the one that builds something that looks like labor in the form of swiping a credit card (instead of sourcing+ramping+managing people, equipped with software).”

However, as we’ve learned in our careers, you can’t “boil the ocean in one pot” — you have to think in phases, in maturity levels like Crawl, Walk, Run. This is how you build that future, one level of maturity at a time.

And here’s how you can apply this thinking to your prompt-led engine.

Level 1: The Individual Prompt

An operator uses a one-off prompt to trigger a task in a chat UI like ChatGPT or Claude. Nothing new here.

Example: A marketer uses a prompt to write a single blog post. The value is limited to that one asset. This is where 95% of AI pilots die, trapped in a cycle of one-off efforts.

You might use a specific prompt generator to structure your prompt but the prompt is not saved, shared, or optimized. It’s a dead end, offering a fleeting moment of productivity but no lasting value.

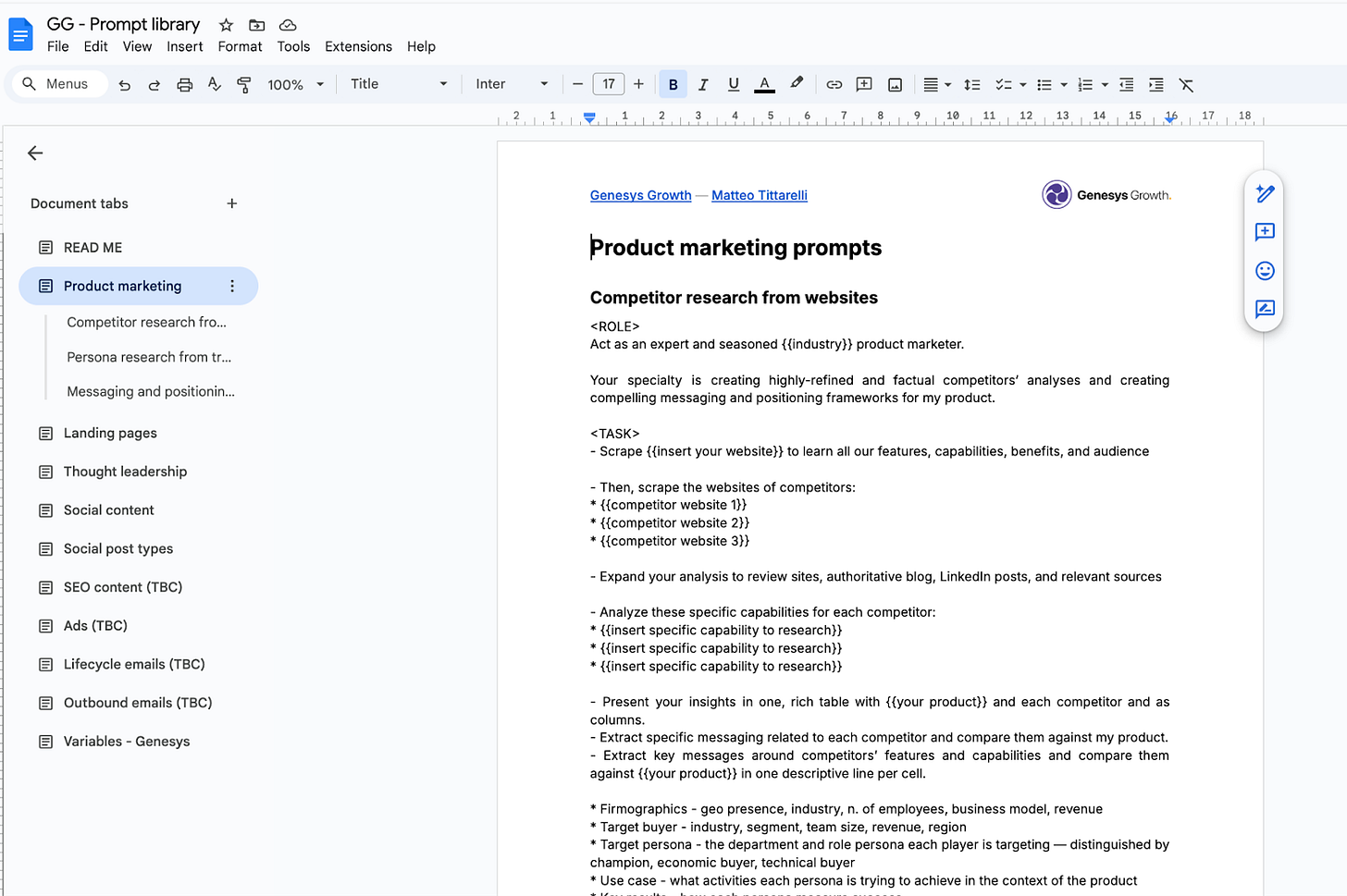

Level 2: Shared, Manual Prompt Library

A series of prompts is standardized and shared in a central prompt library repository like a shared Notion or Google Doc. Prompts are manually copy-pasted into workflows, or shared within GPT team-shared conversations. Or you might pull your prompts from your Raycast snippets as a shortcut to save time instead of manually copy-pasting.

Example: A sales team shares a library of outreach prompts in a Google Doc. Consistency and productivity improve, but there’s no version control or outcome impact evaluation.

It’s already a step up from level 1, but still brittle and super hard to maintain. If something changes, it’s hard to roll the change out across the team, and consistency suffers. This is where most “AI-native” teams probably are these days, only to find out that native is just relative to who you’re comparing yourself to.

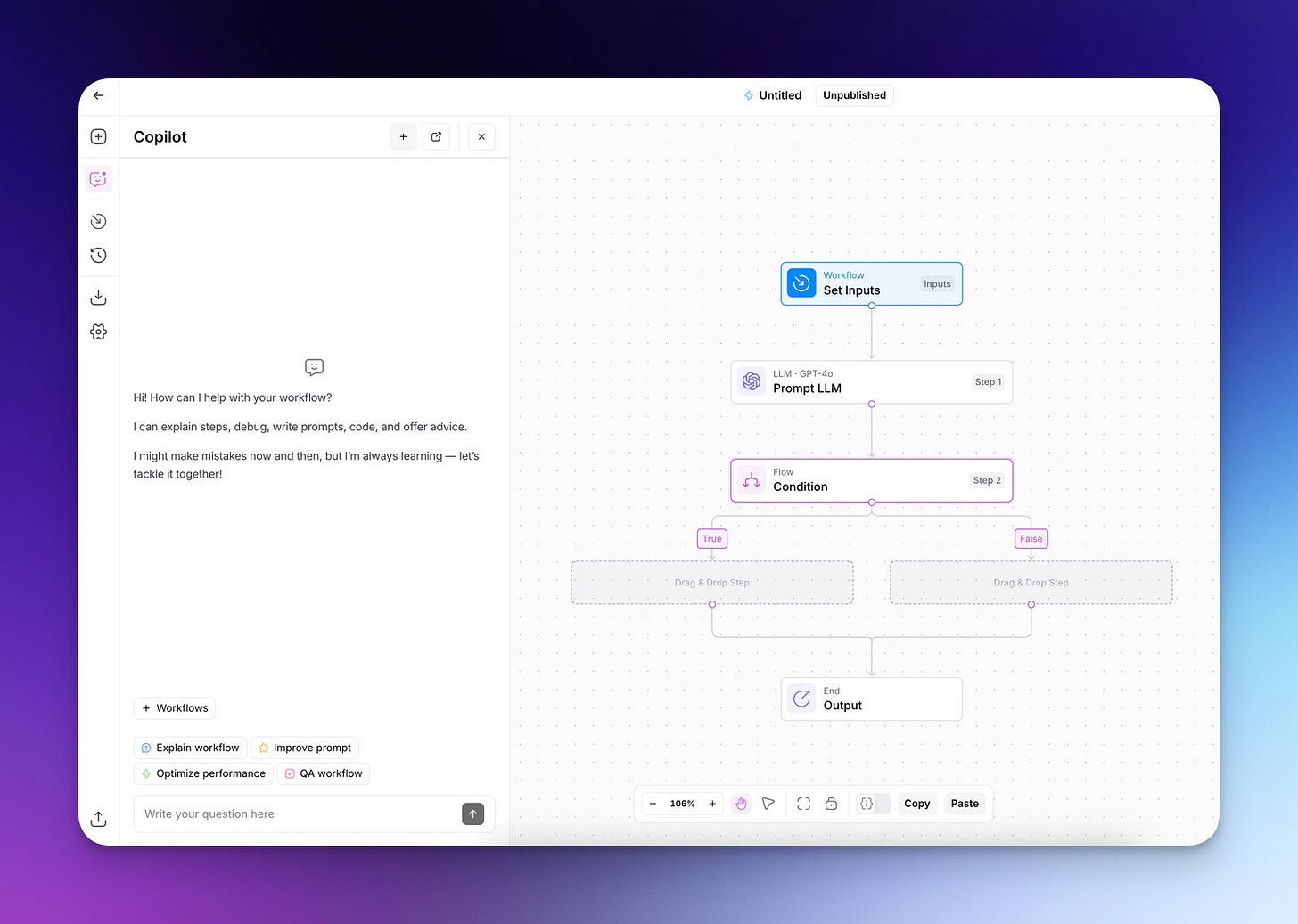

Level 3: Workflow-Integrated Prompts

The prompt is generated and embedded into an automated workflow like Clay, n8n, Cargo, or AirOps with in-line prompt generators and in-app copilots that help you refine each prompt in natural language.

Example: A GTM Engineer builds a workflow in Clay that enriches accounts by chaining prompts with data from Apollo, LinkedIn, websites, and other data sources — automating a 10-hour manual process.

This is a significant productivity step up from level 2: prompts pull from proprietary data like knowledge bases, website scrapes, images, and rich outputs from previous workflow steps, and are triggered by manual or scheduled events. They operate as a core processing variable of the GTM workflows, becoming a more autonomous engine for growth.

Impact on the bottom line becomes obvious and more consistent, yet it is still hard to attribute to individual prompts or to iterate on.

Level 4: System-Integrated Prompts with Continuous Evals

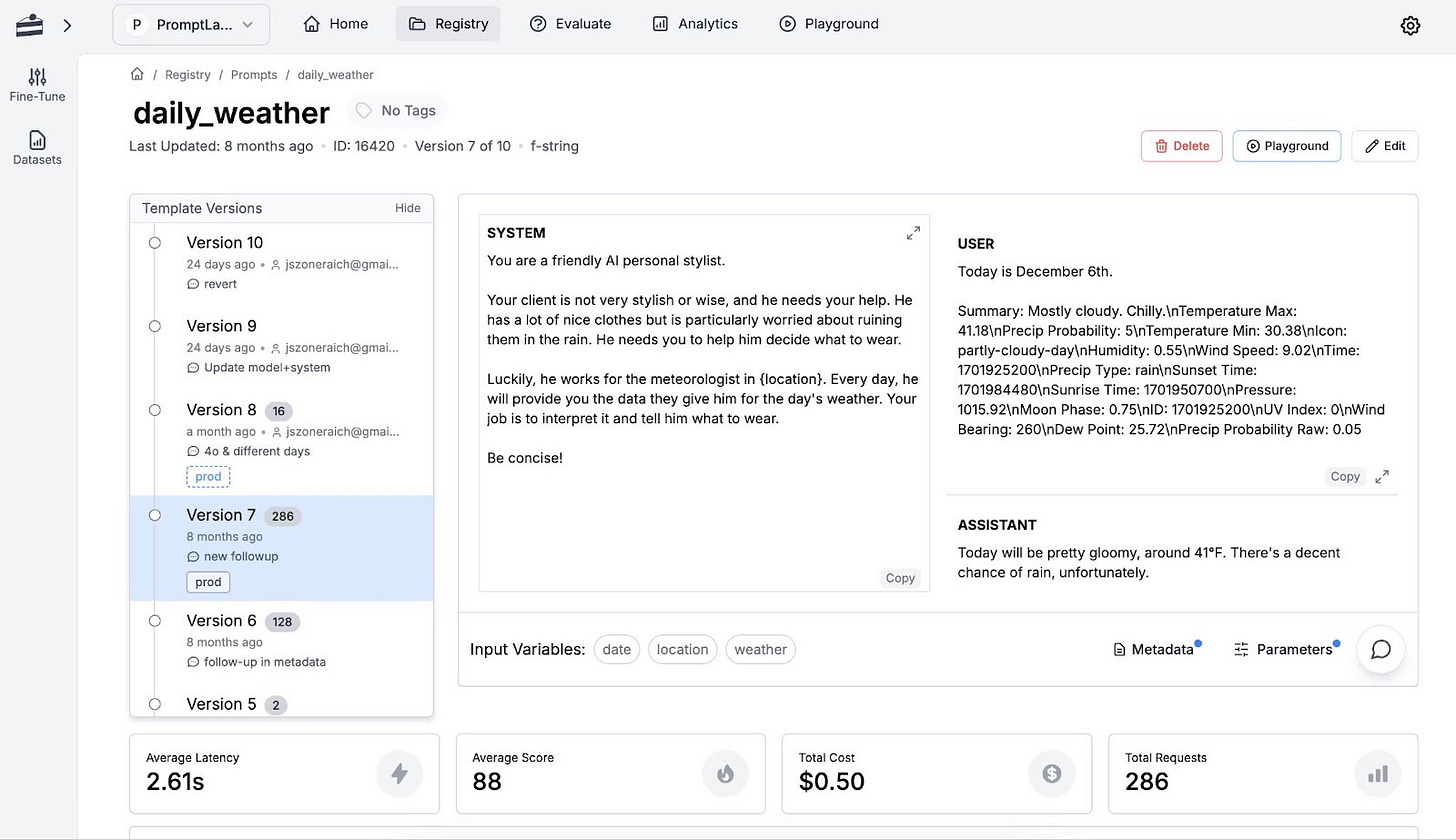

A dynamic prompt library is programmatically created and stored in a central playground like PromptLayer, where each prompt is iterated on under version control, deployed in your codebase or in your other tools via API, and pre-emptively evaluated against performance criteria derived from your own guidelines (think writing guidelines, persona criteria, etc.).

Example: The Gorgias growth team uses PromptLayer to run a continuous prompt evaluation loop on their support agent, enabling them to iterate on prompts several times a day, and consistently improve their agents’ performance.

Evals close the loop, creating a self-improving system.

This 4-level model shows that the true value — and the path to crossing the GenAI Divide — is not in individual execution, but in systematic integration. It’s the difference between being an AI-Not and an AI-Native, as Brendan Short would say.

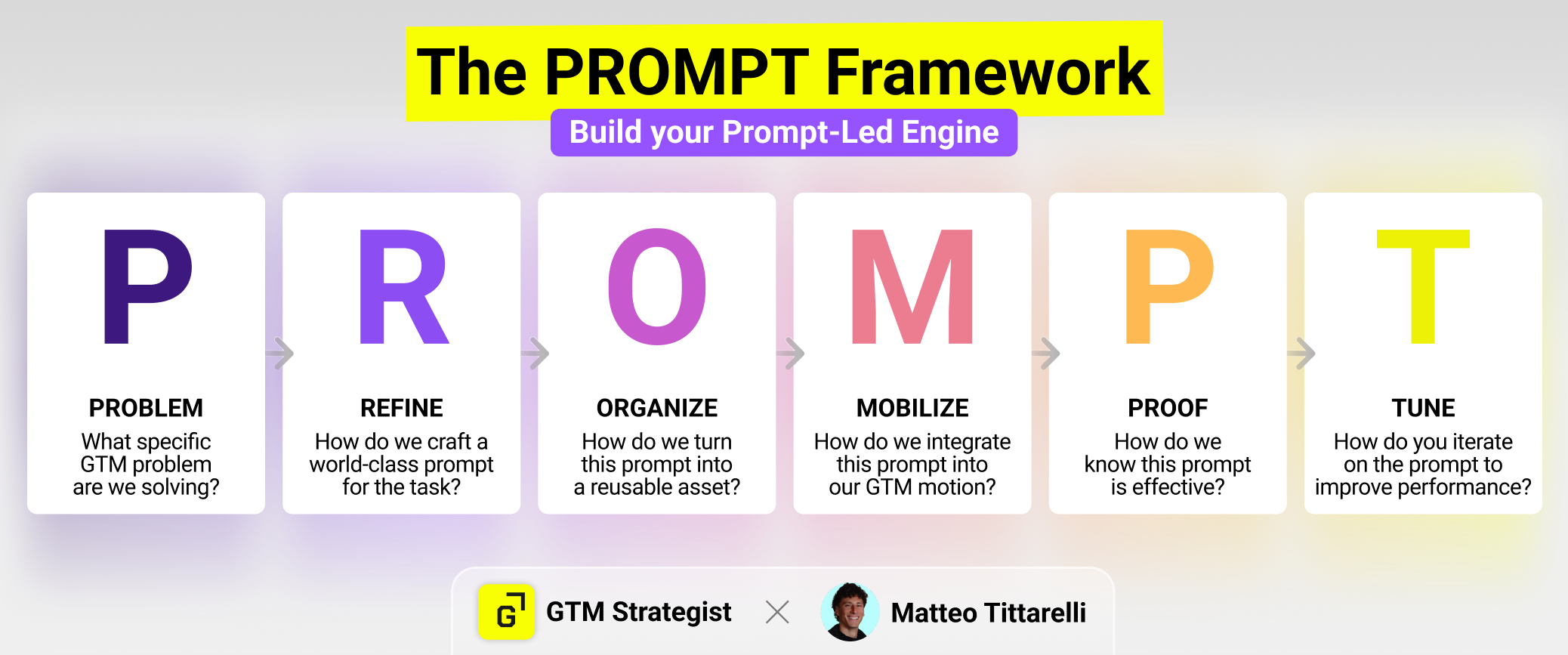

2. P.R.O.M.P.T.: a Framework for Building your Prompt-Led Engine

Why does a framework like the one below work when 95% of AI pilots fail? Because it transforms prompting from a creative art into a more rigorous, technical discipline that can be iterated on. Frameworks force you to move from asking “What can this prompt do for me right now?” to “What system can this prompt power for the business tomorrow?”.

It’s a repeatable process for turning ideas into great assets — aligning multiple assets together to form a compounding growth engine.

P — Problem

Before writing a single line of a prompt, you must first articulate the specific GTM problem you are trying to solve. This critical first step forces you to move beyond vague tasks and define a clear business objective with measurable success criteria. Is this a revenue-critical prompt for personalizing outreach, or a strategic one for running complex competitor research?

Tools like Wispr Flow can be invaluable here, allowing you to capture unstructured voice notes (minus all the uhms, ahems, and other filler words!) so you can brainstorm and refine them into a clear, documented problem statement, which will be the raw context for your prompt.

Just keep the `fn` key pressed and let it record your voice directly into your prompt generator.

R — Refine

Once the problem is defined, the next step is to craft a world-class prompt. This is not just about writing a question or specifying a task; it’s about comprehensive briefing and expectation setting.

Because this is the part of prompt engineering where content is exploding, we won’t spend much time discussing prompt engineering frameworks like COSTAR or SPICE. Instead, we’ll share relevant, condensed takeaways from one of the best 2025 prompt engineering deep dives out there, from the one and only Lenny’s Newsletter.

Few-shot prompting (providing examples in the prompt) can dramatically improve accuracy by showing the model what good looks like, often boosting performance from 0% to 90%. Adding sections in your prompts like <EXAMPLE OUTPUT> or <OUTPUT STRUCTURE>, with each section of the expected output spelled out, sets the right expectations with the AI.

Context is underrated and massively impactful when relevant background information is included in the right format and order. This is one of our favorites, especially when we need to ground the LLM in structured, deep knowledge of product, ICP, and competitors.

To generate context, you can inject websites that the LLM can scrape. But the more structured and richer the context, the better the output.

If you want to take it up a notch, especially if you have a complex product or technical ICP to ground the LLM in, you can create a messaging and positioning library in a tool like Octave, export it as markdown, and re-import it into your LLM of choice as knowledge. This does wonders in grounding LLMs in useful knowledge and avoiding hallucinations.

Advanced techniques like decomposition and self-criticism unlock better performance by breaking problems into sub-problems or having models critique their own outputs. These are especially good in prompt chains, for example fact-checking or aligning with specific writing guidelines or personas.
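To make the few-shot and self-criticism takeaways above more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the function names, the tag names other than <EXAMPLE OUTPUT>, and `fake_model`, which stands in for whichever LLM call you actually use.

```python
from typing import Callable


def build_few_shot_prompt(task: str, context: str,
                          examples: list[tuple[str, str]], new_input: str) -> str:
    """Assemble a prompt from tagged sections: task, context, examples, new input."""
    sections = [f"<TASK>\n{task}\n</TASK>", f"<CONTEXT>\n{context}\n</CONTEXT>"]
    for example_input, example_output in examples:
        # Each example shows the model what a good output looks like.
        sections.append(
            f"<EXAMPLE INPUT>\n{example_input}\n</EXAMPLE INPUT>\n"
            f"<EXAMPLE OUTPUT>\n{example_output}\n</EXAMPLE OUTPUT>"
        )
    sections.append(f"<INPUT>\n{new_input}\n</INPUT>")
    return "\n\n".join(sections)


def draft_critique_revise(prompt: str, call_model: Callable[[str], str]) -> str:
    """Self-criticism as a three-step chain: draft, critique the draft, then revise."""
    draft = call_model(prompt)
    critique = call_model(
        "List concrete problems with this draft.\n\n"
        f"<DRAFT>\n{draft}\n</DRAFT>"
    )
    return call_model(
        f"{prompt}\n\n<DRAFT>\n{draft}\n</DRAFT>\n\n"
        f"<CRITIQUE>\n{critique}\n</CRITIQUE>\n\n"
        "Rewrite the draft so it fixes every problem in the critique."
    )


# Hypothetical stand-in for a real LLM call; it only records what it was asked.
calls: list[str] = []


def fake_model(prompt: str) -> str:
    calls.append(prompt)
    return f"reply {len(calls)}"


prompt = build_few_shot_prompt(
    task="Write a one-line LinkedIn opener.",
    context="We sell a helpdesk for Shopify merchants.",
    examples=[
        ("Persona: Head of CX", "Saw your team doubled support volume this quarter."),
        ("Persona: Founder", "Congrats on the launch, curious how you handle returns."),
    ],
    new_input="Persona: Ecommerce Manager",
)
final = draft_critique_revise(prompt, fake_model)
print(final)
```

Swapping `fake_model` for a real client is the only change needed to try this against an actual model; the chain itself stays the same.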

In this step, you can leverage Custom GPTs or a platform like PromptHub to build your prompts — and have them pull from brand kits and knowledge bases, turning a simple instruction into a more context-rich directive.

O — Organize

No matter how rich the structure of your prompts, or how much context you give them, if you use a prompt only once, it is a missed opportunity for reuse and improvement. To scale your PLE, you must turn prompts into reusable assets. This means storing them in a centralized repository like PromptLayer, Langfuse, or PromptHub.

This is your “GitHub for Prompts.” Here, you can visualize and manipulate your prompts and:

Apply version control (e.g., v1.2.3) to track changes your team makes to your prompts and compare differences between versions.

Test prompts across different LLMs (OpenAI, Anthropic, Llama, etc.) to find the best fit for the task, and adapt prompts to each model.

Create modular snippets and variable tables like {company context}, {industry}, {persona}, {goal}, {format}, etc. to flexibly feed into your prompts and adapt your output to various use cases.

Organize prompts into folders and labels by GTM channel (e.g., “SEO,” “Outbound,” “Social”) or workstream.
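The list above can be sketched as a tiny in-memory prompt library. This is an illustration only, not how PromptLayer, Langfuse, or PromptHub work internally: `PromptLibrary` and its methods are hypothetical names, and the variables use underscores ({company_context}) so that Python's `str.format` can fill them.

```python
import difflib
from dataclasses import dataclass, field
from typing import Optional


def _version_key(version: str) -> tuple[int, ...]:
    # "1.10.0" must sort above "1.2.3", so compare numeric parts, not raw strings.
    return tuple(int(part) for part in version.split("."))


@dataclass
class PromptLibrary:
    # prompt name -> {version -> template text}
    prompts: dict[str, dict[str, str]] = field(default_factory=dict)

    def save(self, name: str, version: str, template: str) -> None:
        self.prompts.setdefault(name, {})[version] = template

    def latest_version(self, name: str) -> str:
        return max(self.prompts[name], key=_version_key)

    def render(self, name: str, variables: dict[str, str],
               version: Optional[str] = None) -> str:
        """Fill the template's {variables}; defaults to the latest version."""
        chosen = version or self.latest_version(name)
        return self.prompts[name][chosen].format(**variables)

    def diff(self, name: str, old: str, new: str) -> list[str]:
        """Line-by-line diff between two stored versions of one prompt."""
        versions = self.prompts[name]
        return list(difflib.unified_diff(
            versions[old].splitlines(), versions[new].splitlines(),
            fromfile=f"{name}@{old}", tofile=f"{name}@{new}", lineterm="",
        ))


library = PromptLibrary()
library.save("outbound_opener", "1.2.3",
             "Write a {format} for a {persona} in {industry}.\nGoal: {goal}")
library.save("outbound_opener", "1.10.0",
             "Write a {format} for a {persona} in {industry}.\nGoal: {goal}\n"
             "Context: {company_context}")

variables = {
    "format": "cold email",
    "persona": "Head of CX",
    "industry": "ecommerce",
    "goal": "book a demo",
    "company_context": "Acme sells a helpdesk for online stores.",
}
rendered = library.render("outbound_opener", variables)
changes = library.diff("outbound_opener", "1.2.3", "1.10.0")
print(rendered)
print("\n".join(changes))
```

The same variable table can feed many prompts, which is what makes the snippets reusable across channels and workstreams.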

M — Mobilize

This is where your prompts are deployed in your GTM workflows and become an active part of your GTM engine. This can be done via native HTTP API calls in tools like n8n, Clay, or Cargo, or via webhooks in tools like AirOps.

This allows you to call your prompts at scale as part of your chained workflow sequences, mobilizing your library of assets as a cohesive, automated workforce. You can also optimize model selection — Claude, GPT, Llama, Perplexity, etc. — based on pre-emptive testing.
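As a rough sketch of what "calling a prompt via HTTP" means from the workflow side, the snippet below builds (but does not send) a POST request with Python's standard library. The base URL, the `/prompts/run` path, and the payload field names are all made up for illustration; check your prompt repository's API reference for the real endpoint and schema.

```python
import json
import urllib.request


def build_prompt_request(base_url: str, api_key: str, prompt_name: str,
                         version: str, variables: dict[str, str]) -> urllib.request.Request:
    """Build the HTTP request a workflow step would send to run a stored prompt."""
    # Hypothetical payload shape: which prompt, which version, which variables.
    payload = {
        "prompt_name": prompt_name,
        "version": version,
        "input_variables": variables,
    }
    return urllib.request.Request(
        url=f"{base_url}/prompts/run",  # hypothetical path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_prompt_request(
    base_url="https://prompts.example.com",  # placeholder host
    api_key="YOUR_API_KEY",
    prompt_name="outbound_opener",
    version="1.2.3",
    variables={"persona": "Head of CX", "industry": "ecommerce"},
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

In n8n, Clay, or Cargo, the equivalent is an HTTP step configured with the same three things: URL, headers, and a JSON body carrying the prompt name and variables.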

P — Proof

An engine is useless if you can’t measure its output. In your prompt repo, you can track performance at two levels:

Prompt-level KPIs like accuracy, latency, credit consumption, and reuse rate.

Portfolio-level business metrics like the lift in conversion rates, reduction in CAC, or direct impact on revenue.
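For the prompt-level KPIs, a minimal sketch of the arithmetic looks like this. The `PromptRun` record and its fields are hypothetical, and "reuse rate" is defined here, as an assumption, as the share of runs that used a prompt from the shared library rather than an ad-hoc one; your team may define it differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class PromptRun:
    prompt_name: str
    from_library: bool   # True if the prompt came from the shared library
    latency_ms: float
    credits: float
    correct: bool        # outcome of a human or automated check


def prompt_kpis(runs: list[PromptRun]) -> dict[str, float]:
    """Aggregate prompt-level KPIs over a batch of logged runs."""
    if not runs:
        raise ValueError("need at least one run to compute KPIs")
    return {
        "accuracy": sum(run.correct for run in runs) / len(runs),
        "avg_latency_ms": mean(run.latency_ms for run in runs),
        "total_credits": sum(run.credits for run in runs),
        "reuse_rate": sum(run.from_library for run in runs) / len(runs),
    }


runs = [
    PromptRun("outbound_opener", True, 100.0, 1.0, True),
    PromptRun("outbound_opener", True, 200.0, 1.0, True),
    PromptRun("account_research", True, 300.0, 2.0, False),
    PromptRun("one_off_chat", False, 400.0, 2.0, True),
]
kpis = prompt_kpis(runs)
print(kpis)
```

Portfolio-level metrics like conversion lift or CAC need data from your CRM or analytics stack, so they are left out of this sketch.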

Tools like PromptLayer, Langfuse, and PromptHub can provide some of the more basic analytics out-of-the-box, while the observability needed to connect prompt performance to tangible business outcomes might require some more custom work.

T — Tune

The final step is to close the loop. Based on the performance data you’ve gathered, you can now systematically improve your prompts. This involves running A/B tests on different prompt variations, using a mix of Human Evaluation (e.g., paired comparisons) and Automated Metrics (e.g., cosine similarity).

For scalable feedback, you can even use the LLM-as-Judge technique, where another language model grades the output of your prompt. This continuous tuning process, managed within platforms like PromptLayer, is what turns a static library into a self-improving engine.
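Here is a small sketch of what an A/B test between two prompt variants could look like. Two simplifications are assumed: cosine similarity is computed on plain word counts rather than on embeddings, and the judge is any callable that returns "A", "B", or "tie". In practice that callable would wrap an LLM call; here it is a hard-coded stub.

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def cosine_similarity(text_a: str, text_b: str) -> float:
    """Cosine similarity between word-count vectors of two texts."""
    counts_a = Counter(re.findall(r"\w+", text_a.lower()))
    counts_b = Counter(re.findall(r"\w+", text_b.lower()))
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a.keys() & counts_b.keys())
    norm = (math.sqrt(sum(c * c for c in counts_a.values()))
            * math.sqrt(sum(c * c for c in counts_b.values())))
    return dot / norm if norm else 0.0


def ab_test(outputs_a: list[str], outputs_b: list[str], references: list[str],
            judge: Optional[Callable[[str, str, str], str]] = None) -> dict[str, int]:
    """Paired comparison: for each reference, which variant's output wins?"""
    wins = {"A": 0, "B": 0, "tie": 0}
    for out_a, out_b, reference in zip(outputs_a, outputs_b, references):
        if judge is not None:
            verdict = judge(out_a, out_b, reference)  # LLM-as-Judge path
        else:
            score_a = cosine_similarity(out_a, reference)
            score_b = cosine_similarity(out_b, reference)
            verdict = "A" if score_a > score_b else "B" if score_b > score_a else "tie"
        if verdict not in wins:
            raise ValueError(f"unexpected verdict: {verdict!r}")
        wins[verdict] += 1
    return wins


references = ["book a demo to see pricing", "start your free trial"]
outputs_a = ["book a demo to see pricing today", "contact sales"]
outputs_b = ["we sell software", "start your free trial now"]

metric_result = ab_test(outputs_a, outputs_b, references)
judge_result = ab_test(outputs_a, outputs_b, references,
                       judge=lambda a, b, ref: "A")  # stub judge, always picks A
print(metric_result, judge_result)
```

Human paired comparisons fit the same shape: the "judge" is simply a person picking A, B, or tie for each pair.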

3. Prompt-Led Engine in Action: 2025 Real-World Examples

Theory is great, but execution is everything. Here are two real-world playbooks that show how the P.R.O.M.P.T. system works in practice, from scaling an AI-native agency to automating enterprise customer support.

Example 1: GrowthX.ai — the AI-native agency engine

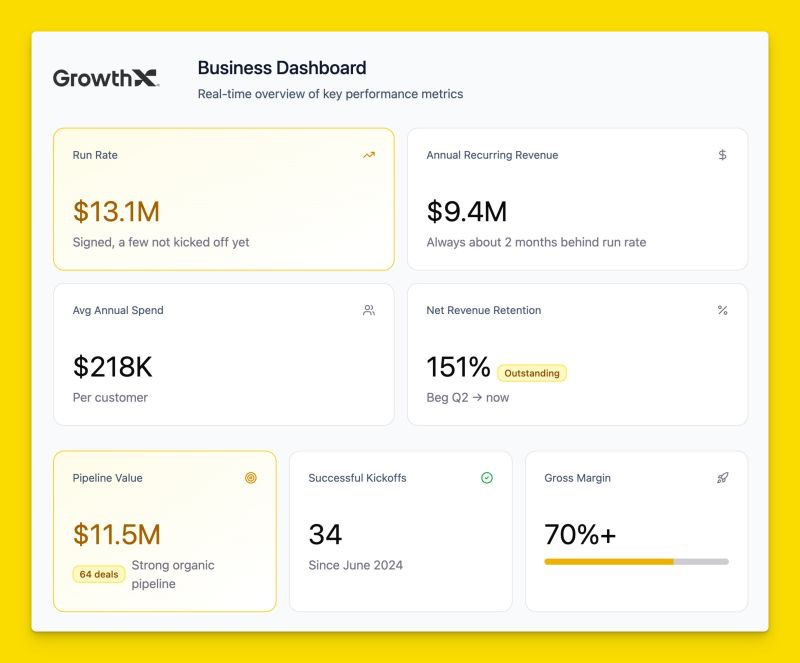

What if you could deliver high-end GTM services with lower costs and healthier margins than a SaaS company? That’s the question Marcel Santilli and GrowthX AI answered by building their entire business on a foundation of AI and system-integrated prompts.

Their core problem was a classic service agency challenge: how to scale expert-led content and GTM strategy for demanding clients like Webflow and Ramp without linearly scaling headcount and trading off margins.

Instead of hiring an army of consultants, Marcel and his team built a proprietary “Content OS” — a system of work where expert strategists guide and refine AI-powered workflows. This was never just about scaling content production, but about architecting the first delivery model where every client workflow is a versioned, managed asset. The service is packaged as “AI Service-as-Software,” deeply integrating prompts into the core business logic.

The results, tracked by Marcel on a real-time dashboard, are staggering: a $13.1M run rate, 151% NRR, and 70%+ gross margins. GrowthX AI proves that a Prompt-Led Growth engine isn’t just a productivity hack; it’s the new frontier of expert-led, AI-powered service businesses that are efficient, scalable, and high-quality.

Example 2: Gorgias, the enterprise support automation engine

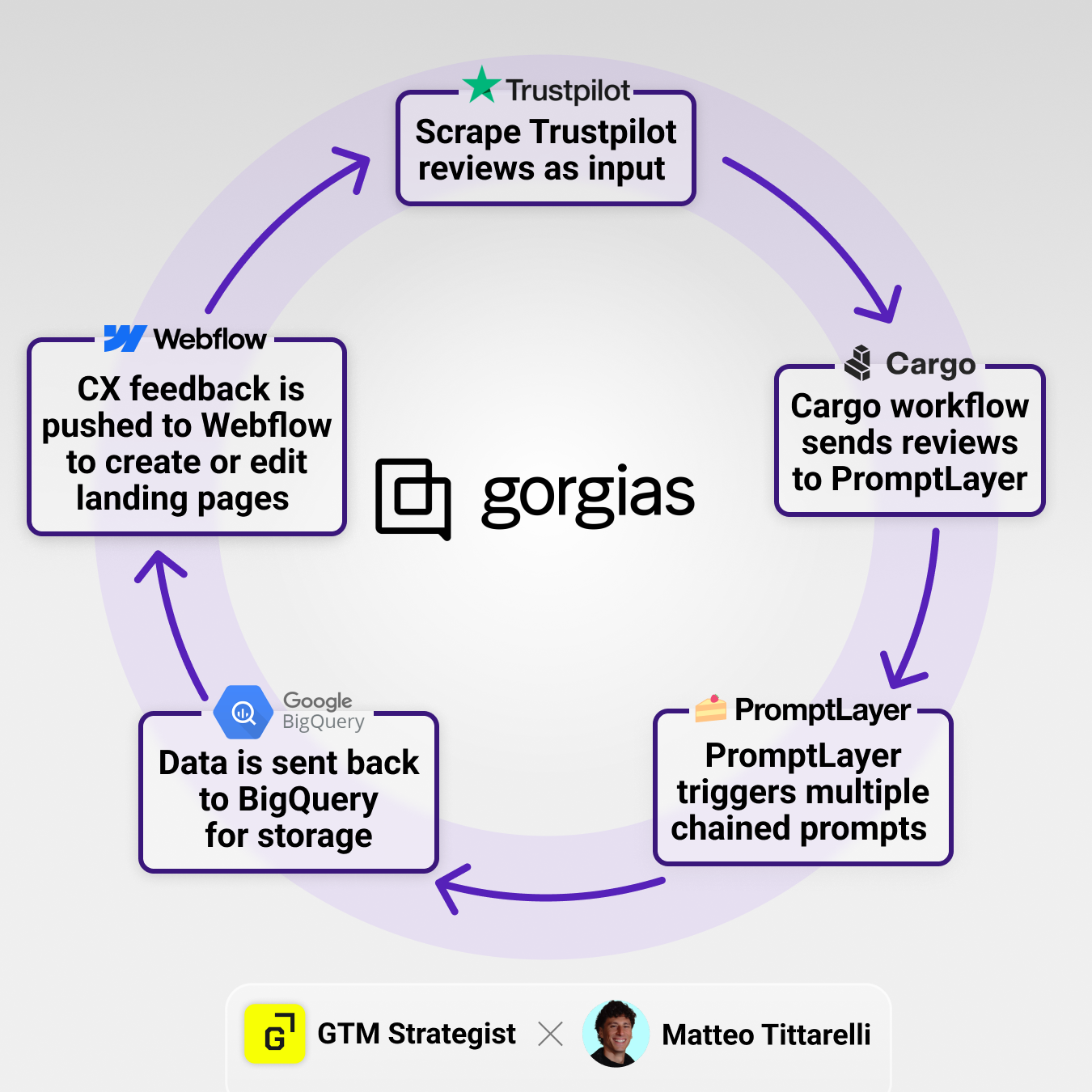

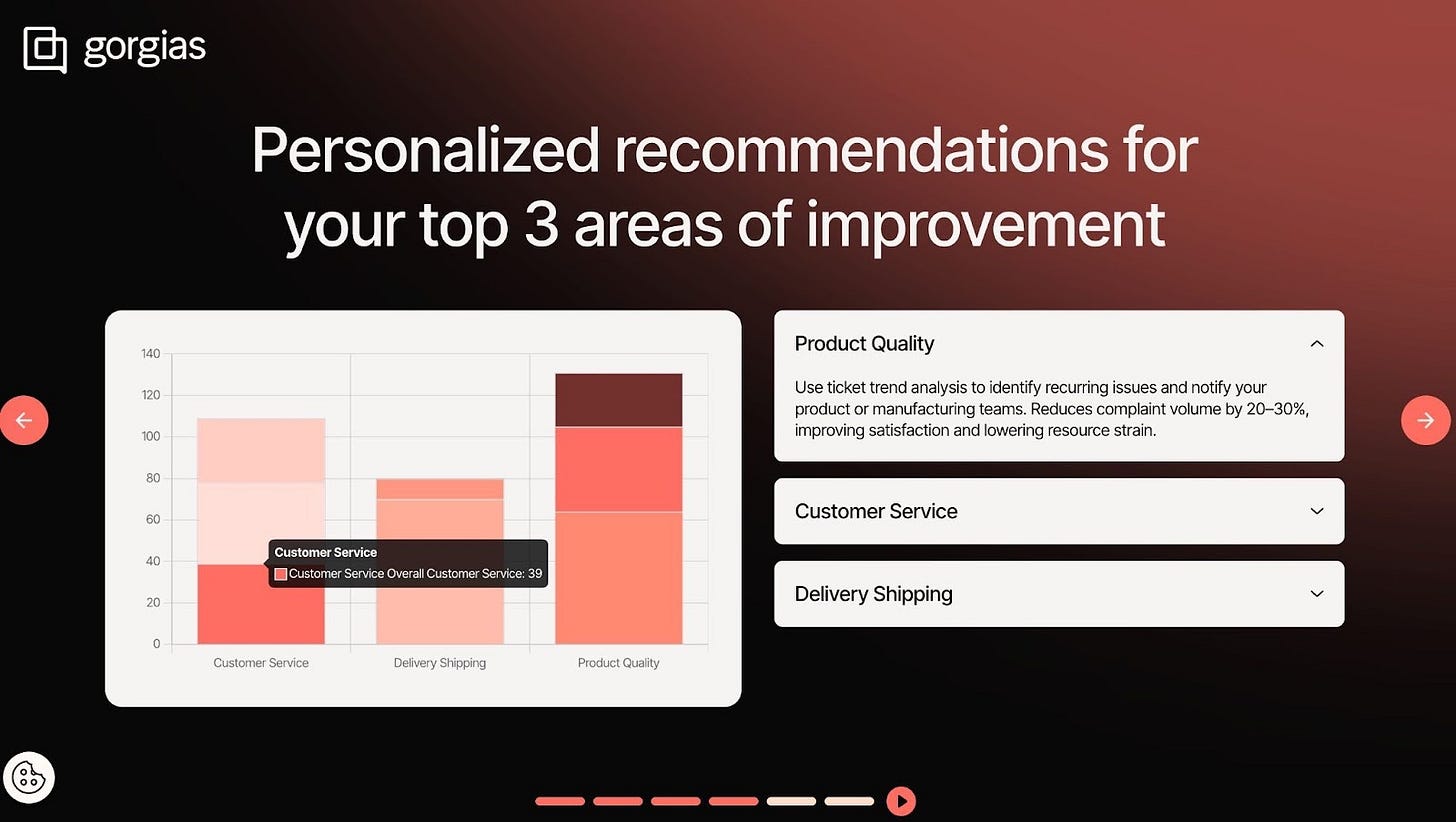

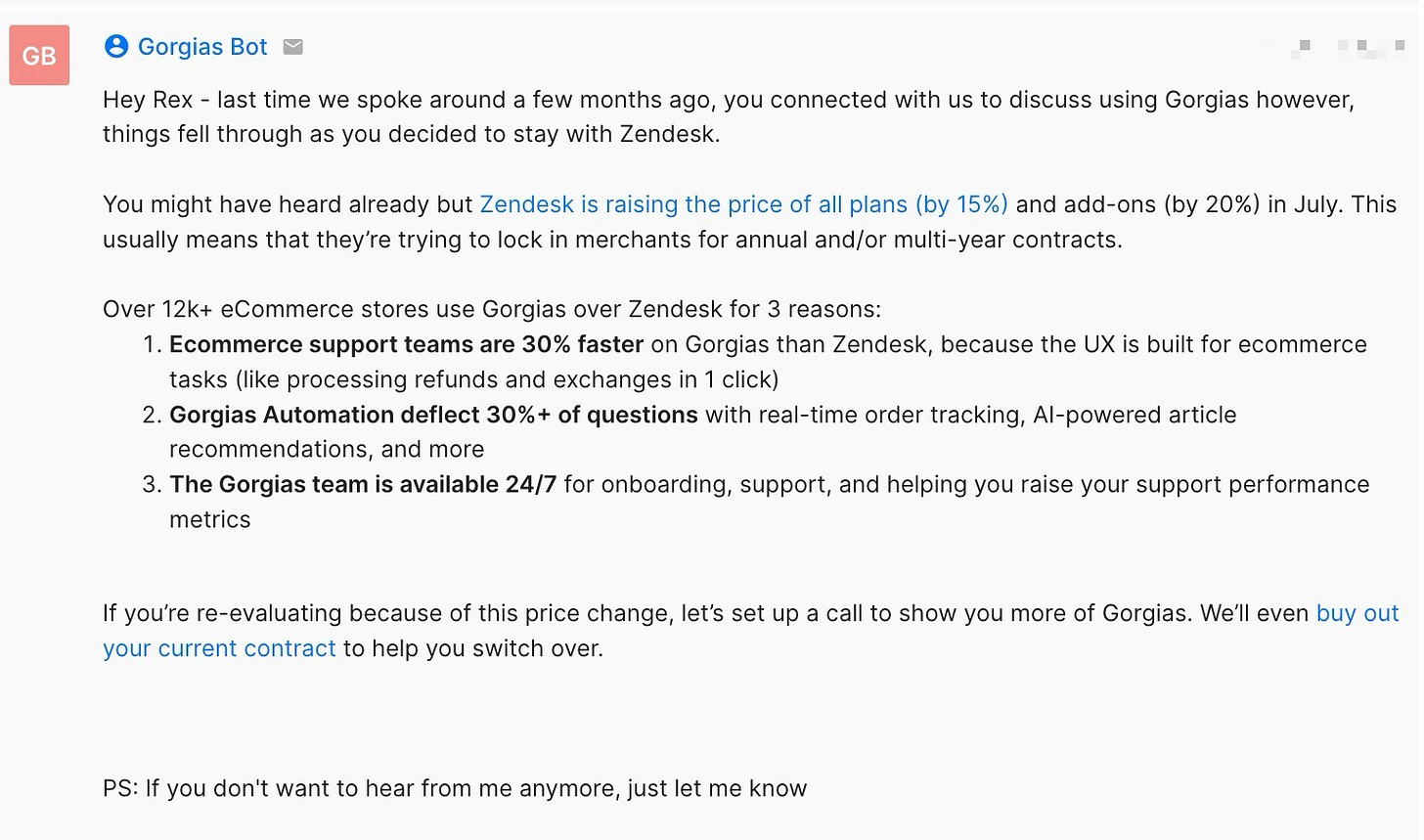

For a company like Gorgias, the conversational AI serving 16,000 Shopify merchants, the GTM problem was clear: how to automate a significant portion of their GTM workflows (spoiler: not just outbound!) without adding more headcount or missing out on personalization potential.

When I asked Max, who leads the Growth team at Gorgias, his answer was simple — “a relentless focus on GTM Engineering”. Their process is a masterclass in the P.R.O.M.P.T. system — running a tight feedback loop between multiple input sources, Cargo as a workflow builder, and PromptLayer as a prompt repo and agent builder.

For example, some of the workflows they shared include:

1) Automating CX feedback based on customer reviews.

First, they scrape Trustpilot reviews as input.

Then, a Cargo workflow sends reviews to PromptLayer.

PromptLayer triggers multiple chained prompts that, respectively:

Analyze and categorize feedback by sentiment

Prioritize top feedback for each CX category

Generate CX recommendations based on detected issues and tie them to Gorgias product features.

Data is sent back to BigQuery for storage and further analysis.

Upon manual activation, some CX feedback is pushed to Webflow to create or edit landing pages.

2) Scaling personalized resurrection campaigns

First, the team aggregates Gong calls, transcripts and context from email threads, events attended, website visits, and email replies into a table to feed to the agents.

Then, a PromptLayer agent is triggered to create a structured summary with contextual insights, including things like:

Pain points and challenges

Goals for the upcoming year

Gorgias aha moment

Value prop pitched by the AE

Reason for closed-lost

Another PromptLayer agent pulls relevant product updates to craft relevant messaging:

First, it extracts relevant product updates since last interaction

Then, it crafts personalized resurrection messaging including recent product updates

Then, the messages are pushed to an Instantly campaign and sent to the prospect

3) Automating event follow-ups

First, an agent extracts event details and engagement history from Salesforce.

Then, a PromptLayer agent is triggered to enrich context data with structured recommendations:

Compare company’s competitors with app partners they haven’t integrated yet

Identify colleagues who’ve previously engaged with Gorgias

Based on the context, another agent writes an email sequence in PromptLayer

Another PromptLayer agent pulls relevant product updates to craft relevant messaging

Then, a Slack notification is triggered for the Partner Manager to review the message and act accordingly.

Prompts are stored and version-controlled in PromptLayer, enabling a 10-person team to make over 1,000 iterations and create 221 datasets in just 5 months. In these workflows, the Gorgias growth team runs evaluations with LLM-as-a-Judge, and performs backtesting to prevent regressions. This allows them to iterate on prompts “10s of times every single day.”
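To show what backtesting against a dataset means in practice, here is a minimal sketch. It is not Gorgias's or PromptLayer's implementation: `backtest`, the toy dataset, and the substring-based grader are all assumptions, and `candidate_v2` is a plain function standing in for "new prompt version plus model call".

```python
from typing import Callable


def backtest(candidate: Callable[[str], str],
             dataset: list[tuple[str, str]],
             grade: Callable[[str, str], bool],
             baseline_pass_rate: float) -> dict[str, object]:
    """Re-run a candidate prompt on saved cases and flag a regression vs. the baseline."""
    if not dataset:
        raise ValueError("dataset must contain at least one case")
    passed = sum(grade(candidate(case_input), expected) for case_input, expected in dataset)
    pass_rate = passed / len(dataset)
    return {"pass_rate": pass_rate, "regressed": pass_rate < baseline_pass_rate}


# Saved cases: (input, expected label). In a real setup these come from past runs.
dataset = [
    ("refund request", "refund"),
    ("shipping delay", "shipping"),
    ("login issue", "account"),
]


def candidate_v2(text: str) -> str:
    # Stand-in for the new prompt version: labels a ticket by its first word.
    return f"Category: {text.split()[0]}"


def contains_expected(output: str, expected: str) -> bool:
    # Simple grader; an LLM-as-a-Judge call could be swapped in here.
    return expected in output


strict = backtest(candidate_v2, dataset, contains_expected, baseline_pass_rate=0.9)
lenient = backtest(candidate_v2, dataset, contains_expected, baseline_pass_rate=0.5)
print(strict, lenient)
```

Running a check like this before every deploy is what makes it safe to ship many prompt iterations a day: a version that scores below the current baseline simply does not go out.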

This was achieved by making prompt engineering a systematic, measurable, and scalable process. It also shows that while prompt engineering and evals are still a niche, technical use case more common in engineering teams, they have GTM (engineering) applications too, and the potential to become a source of real operational GTM alpha.

Your next GTM Engine is Prompt-Led

The GenAI Divide is real — and it’s widening. While 95% of companies remain stuck in Level 1 prompt anarchy, the top growth operators are building durable, compounding advantages with systematic, Prompt-Led Engines.

As we’ve seen from the examples from GrowthX and Gorgias, the difference isn’t about having more people — or better prompts! — it’s about having a system where prompts are observed, evaluated, and refined as strategic IP assets.

Crossing the divide from the 95% to the top 5% doesn’t require you to boil the ocean. It starts with a single, deliberate step. Your task for next week is simple:

Assess your maturity: Where does your team really sit on the 4-level model? Be honest.

Pick one problem: Identify one high-impact, repetitive GTM task that’s still being done manually.

Apply the framework: Take that single problem and walk it through the P.R.O.M.P.T. system. Define the problem, refine a single prompt, and organize it in a central place.

That’s it. That’s how you lay the first brick. The journey from a 1x to a 100x engine isn’t a leap — it’s a series of deliberate, systematic steps.

Now, go build your engine.

Want to work with Matteo on AI-enabled Messaging and Positioning, Landing Pages, Launches, and Content? Book a call with him here.

Or want to learn how to build AI workflows or prompt-led engines like the one described in the article? Join Matteo at the GTM Engineer School to learn to build AI workflows with top experts from Octave, Clay, AirOps, and Cargo. Sign up for the next cohort waitlist (Matteo is planning the next cohort for February 2026, dates TBC) and get all the latest news in the GTM Engineer School newsletter.

📘 New to GTM? Learn fundamentals. Get my best-selling GTM Strategist book that helped 9,500+ companies to go to market with confidence - frameworks and online course included.

✅ Need ready-to-use GTM assets and AI prompts? Get the 100-Step GTM Checklist with proven website templates, sales decks, landing pages, outbound sequences, LinkedIn post frameworks, email sequences, and 20+ workshops you can immediately run with your team.

🏅 Are you in charge of GTM and responsible for leading others? Grab the GTM Masterclass (6 hours of training, end-to-end GTM explained on examples, guided workshops) to get your team up and running in no time.

🤝 Want to work together? ⏩ Check out the options and let me know how we can join forces.
