How to Optimize B2B Websites in 2026: 3 Winning Patterns

Real-world data from top SaaS companies - stop guessing, start engineering higher conversion rates

This newsletter is kindly supported by Attio - the AI-native CRM built for modern GTM teams. If you’ve ever battled a rigid legacy CRM, Attio fixes that with clean data, real-time visibility, and workflows that actually speed you up.

Attio just launched Ask Attio - and it transforms how you work with your CRM. Instead of clicking through records and reports manually, simply ask questions in natural language. Search, update, and create in your CRM with AI.

Dear GTM Strategist!

“Let’s A/B test it.” It is one of the most expensive phrases in GTM. Usually, it translates to: “I have an opinion, and I want to waste 4 weeks of engineering time to prove I am right.”

While most marketers are busy arguing over button colors or hero headlines, the top 1% of B2B companies are playing a different game.

They aren’t guessing. They are running thousands of experiments to find the exact elements that drive revenue.

But here is the problem. You likely don’t have the traffic of Amazon or the engineering resources of Adobe. You cannot statistically validate every hunch.

So, should you just guess? No. You should use GTM Intelligence.

This is why I love the approach of Casey Hill (CMO, DoWhatWorks). He doesn’t rely on “best practices” or opinions. He relies on surveillance.

His team tracks public data across millions of sites. They watch what companies like GoDaddy, Webflow, and Cisco test. And they wait.

If a variation survives for 3+ months? That is a winner. If it disappears? It failed.

This is “Survival of the Fittest” data. It is the closest thing we have to a cheat sheet for high-converting layouts without burning your own budget to find them.
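To make the survival rule concrete, here is a minimal sketch of how such a classification could work. It is an illustration only, not DoWhatWorks' actual method: the `Variant` fields, the 90-day cutoff (standing in for "3+ months"), and the "too early to call" state are all assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Assumption: "3+ months" is approximated as 90 days.
SURVIVAL_DAYS = 90


@dataclass
class Variant:
    """Hypothetical record of a page variation observed on a public site."""
    name: str
    first_seen: date
    last_seen: Optional[date]  # None means the variation is still live


def classify(variant: Variant, today: date) -> str:
    """Label a variation by how long it stayed live."""
    end = variant.last_seen or today
    days_live = (end - variant.first_seen).days
    if days_live >= SURVIVAL_DAYS:
        return "winner"
    if variant.last_seen is not None:
        # It disappeared before reaching the survival threshold.
        return "failed"
    return "too early to call"
```

A variation that is still live but younger than the cutoff gets no verdict yet, which avoids calling a winner on a test that may simply still be running.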

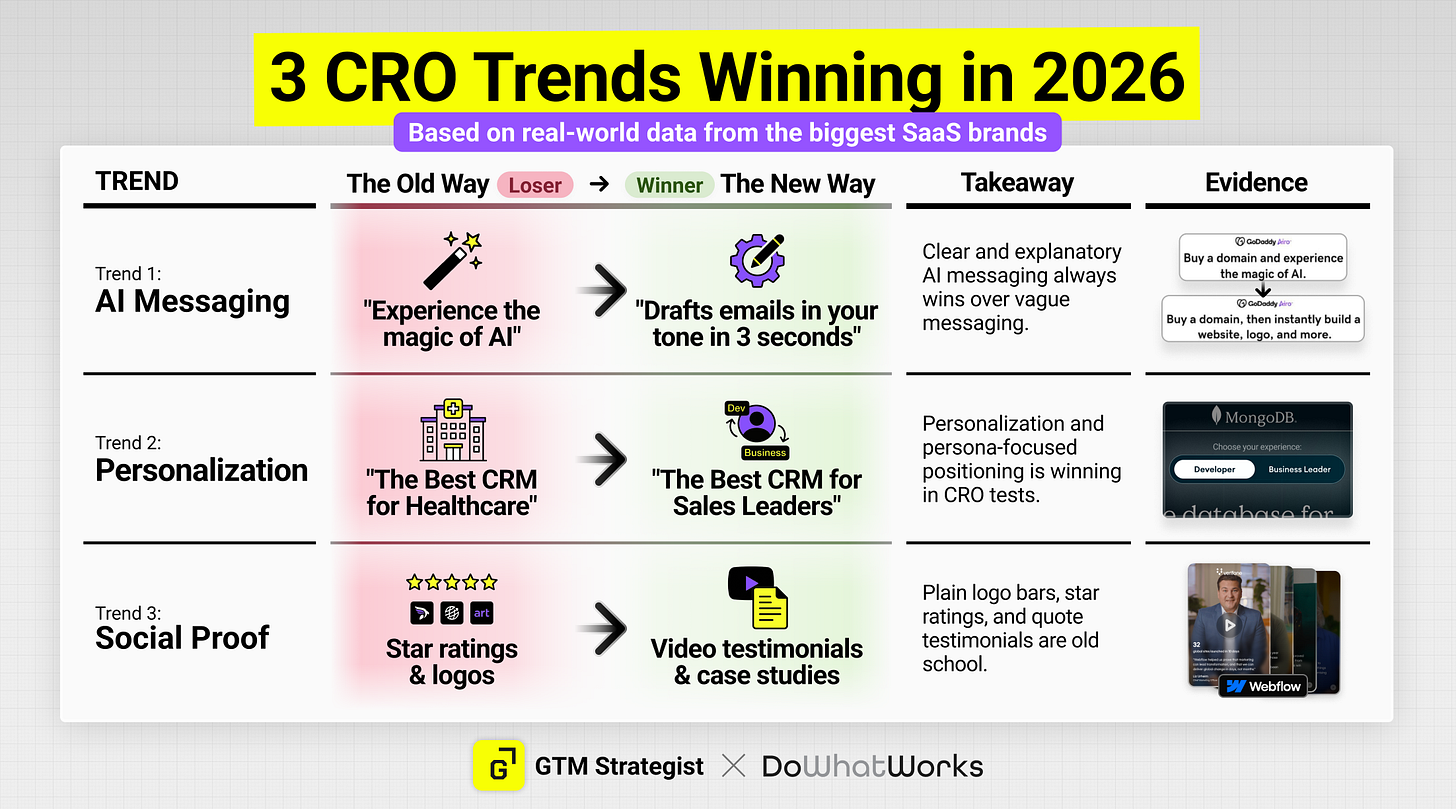

I invited Casey to share the top 3 patterns emerging from this massive dataset for 2026.

What you’ll learn:

The 3 “Sanity Checks” you must pass before you run a single experiment (most companies fail #1).

Why “AI Magic” is losing and what specific messaging is actually converting buyers right now.

The “Cost Signaling” shift: Why easy social proof (stars/logos) is dead and what is replacing it.

CRO should not be about opinions. It should be about engineering context that converts.

Now, let’s look at the data with Casey.

In today’s piece, we are going to talk about some of the emerging trends and key areas to focus your A/B testing in 2026.

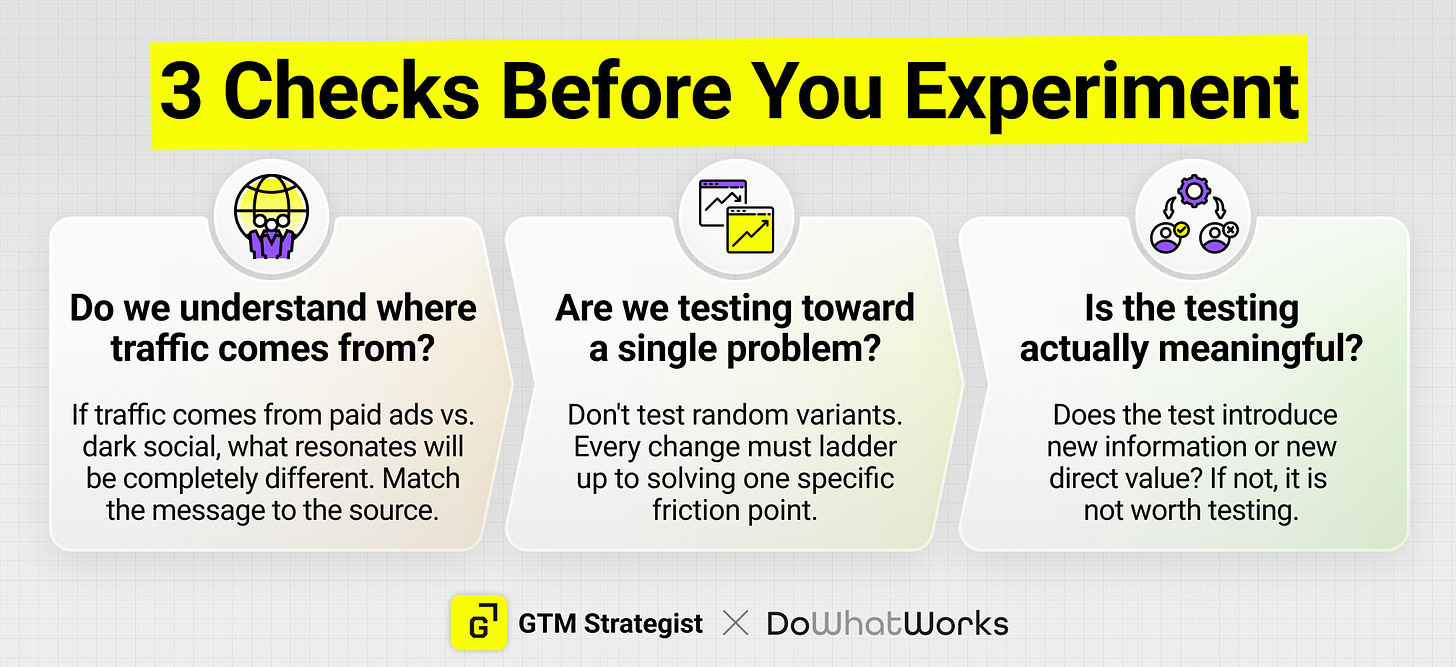

But before we do, there are three key checks you want to look at before you begin testing.

Match traffic source to positioning / messaging. At DoWhatWorks, we drive a lot of our business from content and social channels, so leads who hit the site know relatively little about what our product does (versus, say, free trial users reached by a retargeting ad). We therefore need to explain how the tech works and show lots of examples, and visuals of the software platform are key to avoiding misunderstandings (i.e., we are not an agency).

With our clients, we advise using what we call a UVS (unified variable set). You start with a focused objective (say, reduce complexity on your pricing page), and every change you make should fold back up to that objective. So you might reduce the number of line items in plans, make sure there is just one primary pricing progression metric, or simplify the visual real estate of the page by removing anything above the core plans. With this in mind, you wouldn’t add a quiz below just because it might be helpful if it does not specifically reduce complexity.

Are you testing something that will be meaningful? I use a litmus test that I call the “meaningful test” framework.

I define this principally as a test that does one of two things…

Do you introduce new information?

Do you introduce new direct value?

Let’s start with what is not a meaningful test…

❌ Testing button text of “Book a Demo” vs. “Get Demo”

❌ Testing a headline of “AI-Powered insights that make your team superheroes” vs. “Product. Engineering. Marketing. All aligned thanks to AI-powered insights”

❌ Testing a subheader of “Free forever” vs. “Free version.”

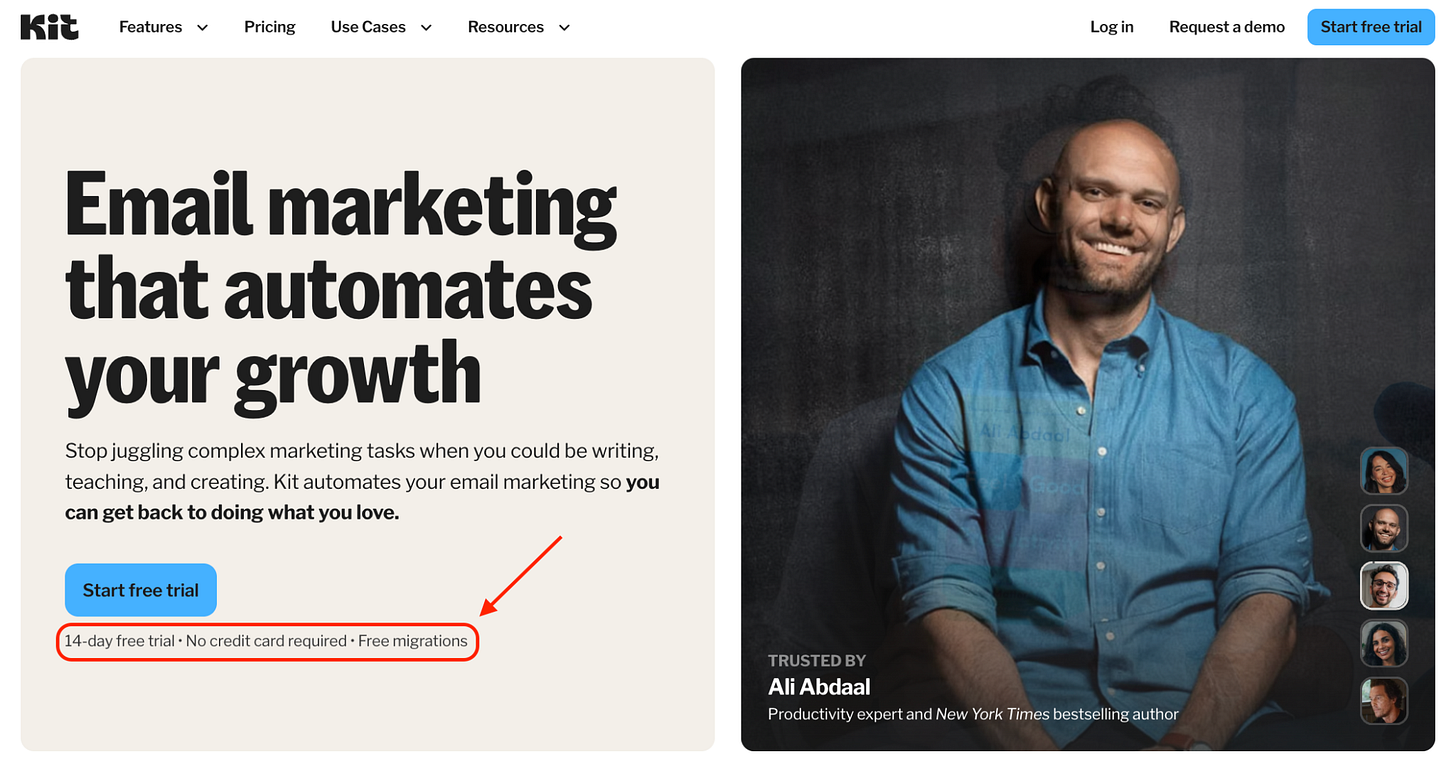

On the flip side, here is reassurance subtext from Kit that checks the “introduce new information” bucket.

They tested subtext that…

Clarifies how long the trial is

Clarifies that no credit card is required

Lets you know they offer free migrations

That is a great example of adding new information in your test.

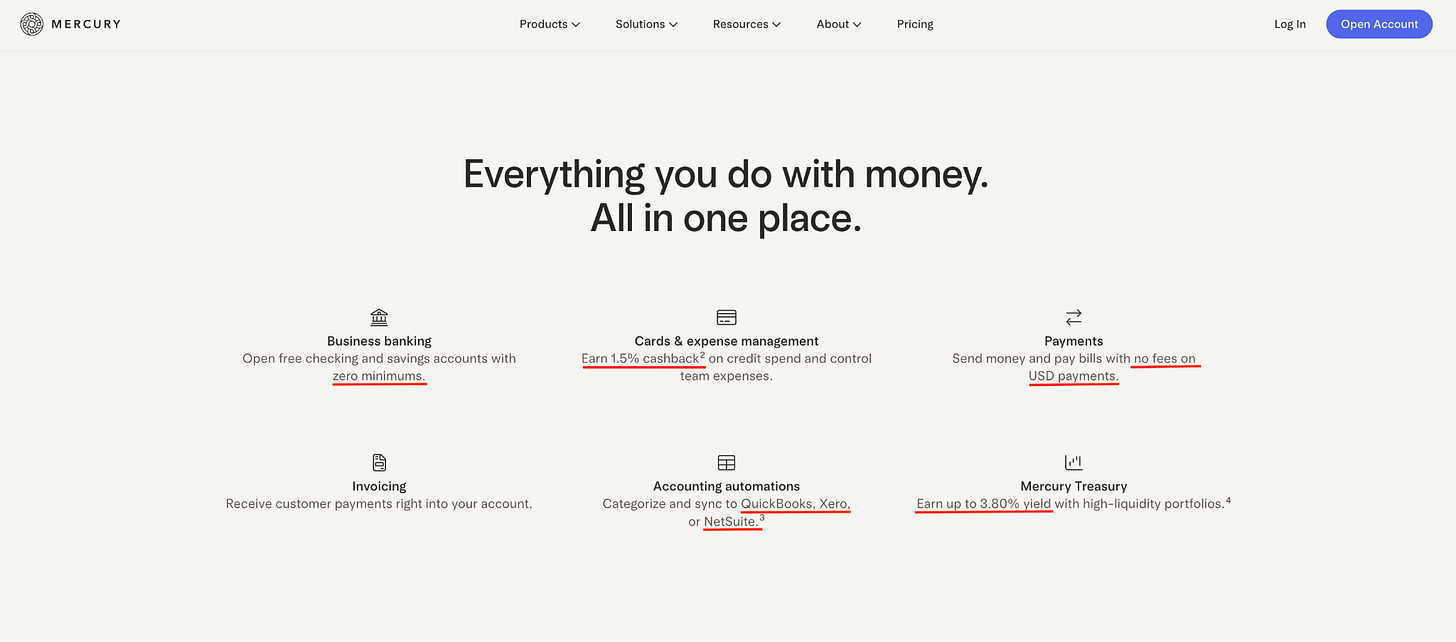

Mercury testing into adding specific numbers into their subheaders is another great example of introducing new information…

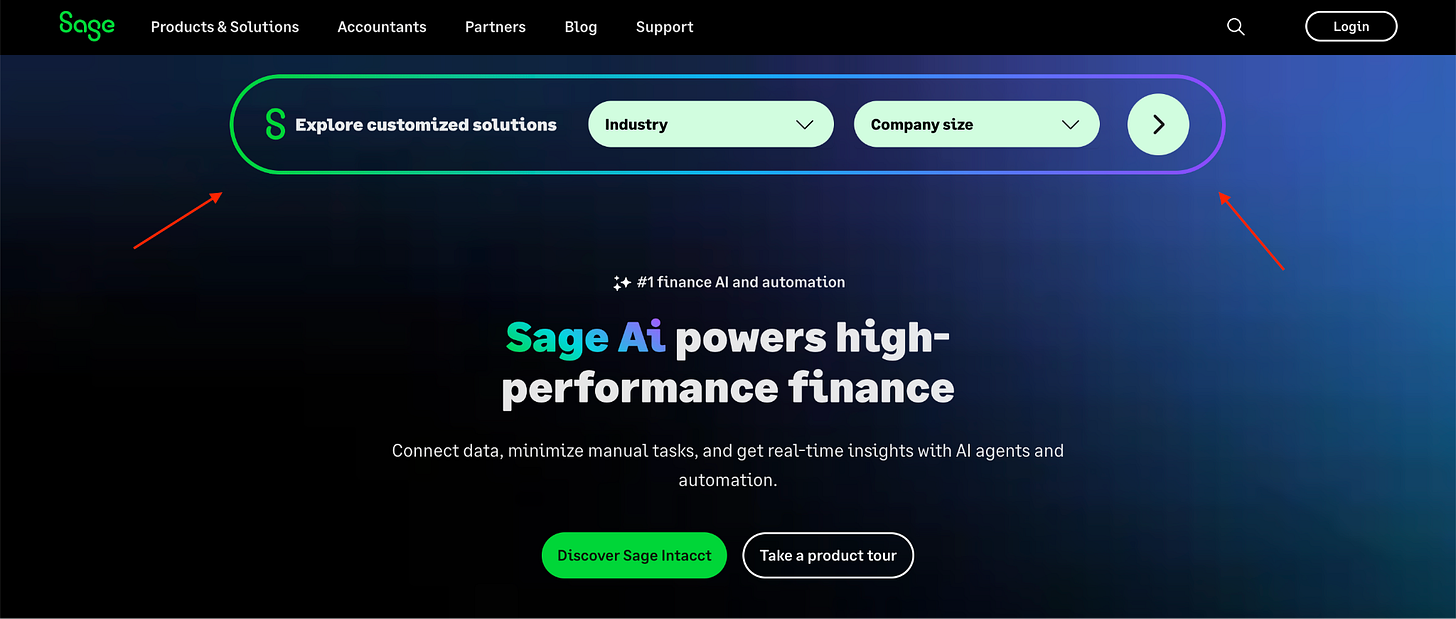

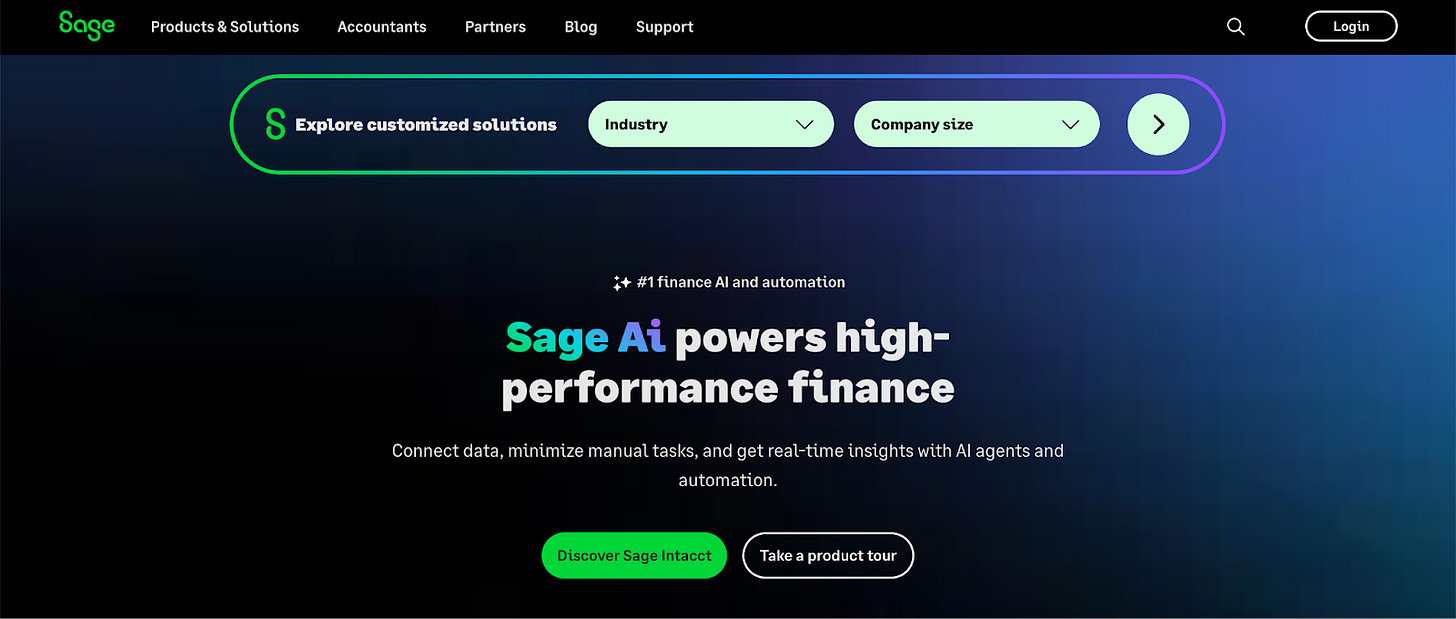

Then, with Sage, you see an example of adding new direct value with an experiment…

They tested into adding a bar at the top of their site where you can add your industry and company size, and then you get redirected to a homepage specifically tailored to that industry and those pain points (I tried it for SaaS, and it was great).

Now, of course, you can run copy tests that don’t check one of the two boxes above, and they can still be successful. But this is a great way to improve your hit rate.

Now, let’s look at a few trends for 2026…

1) AI has a generic problem. Specificity in capabilities is the answer.

There is a major problem across AI companies selling agentic products.

They are unbelievably vague in their messaging.

I curated a list of 30 top companies selling agents.

Here are the headlines…

Company A: “We are a frontier research and deployment company providing enterprises with a powerful, cost-efficient, and easy-to-adopt technology to integrate agentic AI directly into their core workflows and processes.”

Company B: “Deploy, orchestrate, and govern fleets of specialized AI agents that work alongside your team”

Company C: “Ops teams can build and manage an entire AI workforce in one powerful visual platform.”

Company D: “[company] is the simplest way for businesses to create, manage, and share agents.”

It took me until the eighth company on the list to find one that gave me any real information about what it did.

Company H: “Docket’s AI agent handles qualification, discovery, and booking while you sleep. Teams see 30%+ more qualified pipeline from the same traffic.”

I understand these brands want the optics of addressing a large TAM, but when you can visit ten sites and find almost identical headlines, the messaging isn’t giving prospects any clarity or insight to help them decide on your product.

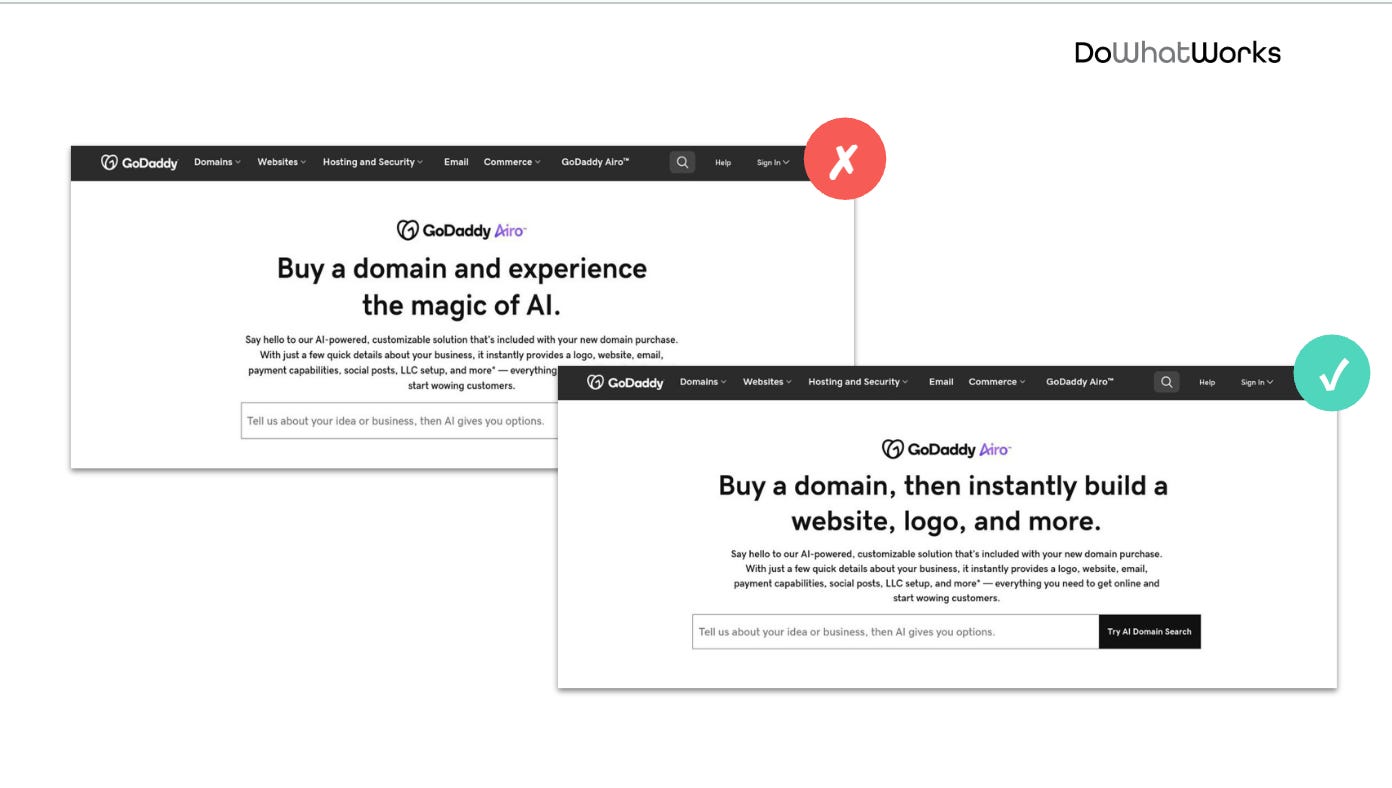

What does the data say 💡: I looked at DoWhatWorks’ database of hundreds of A/B tests around AI messaging, with tests from brands like OpenAI, IBM, Hootsuite, GoDaddy, Notion, and beyond. Consistently, vague AI positioning loses, and more tangible, capability-oriented language wins.

Here is a test from a GoDaddy sales page that perfectly illustrates this.

The takeaway is clear → AI messaging that clearly explains the capability it delivers (e.g., our support agents provide 24/7 ticket coverage in 42 languages) dramatically outperforms vague or sweeping messaging.

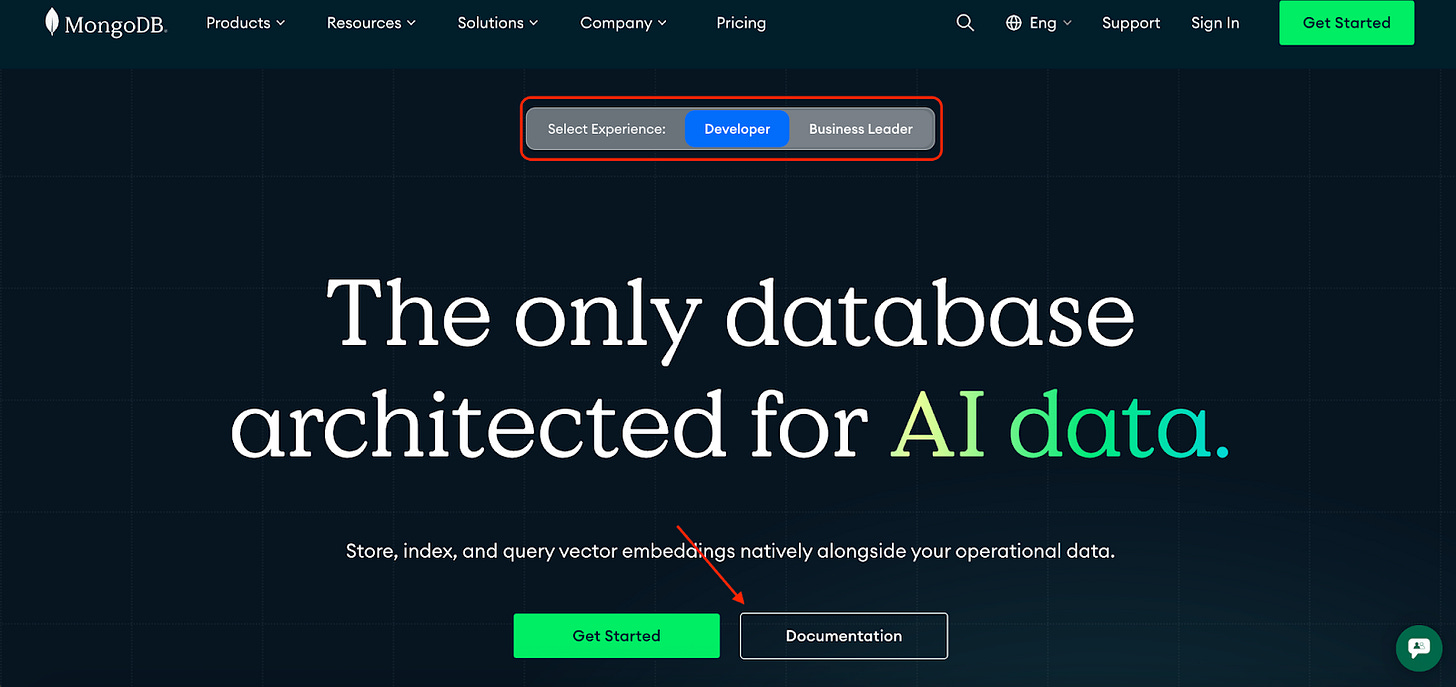

2) Persona-based personalization is winning

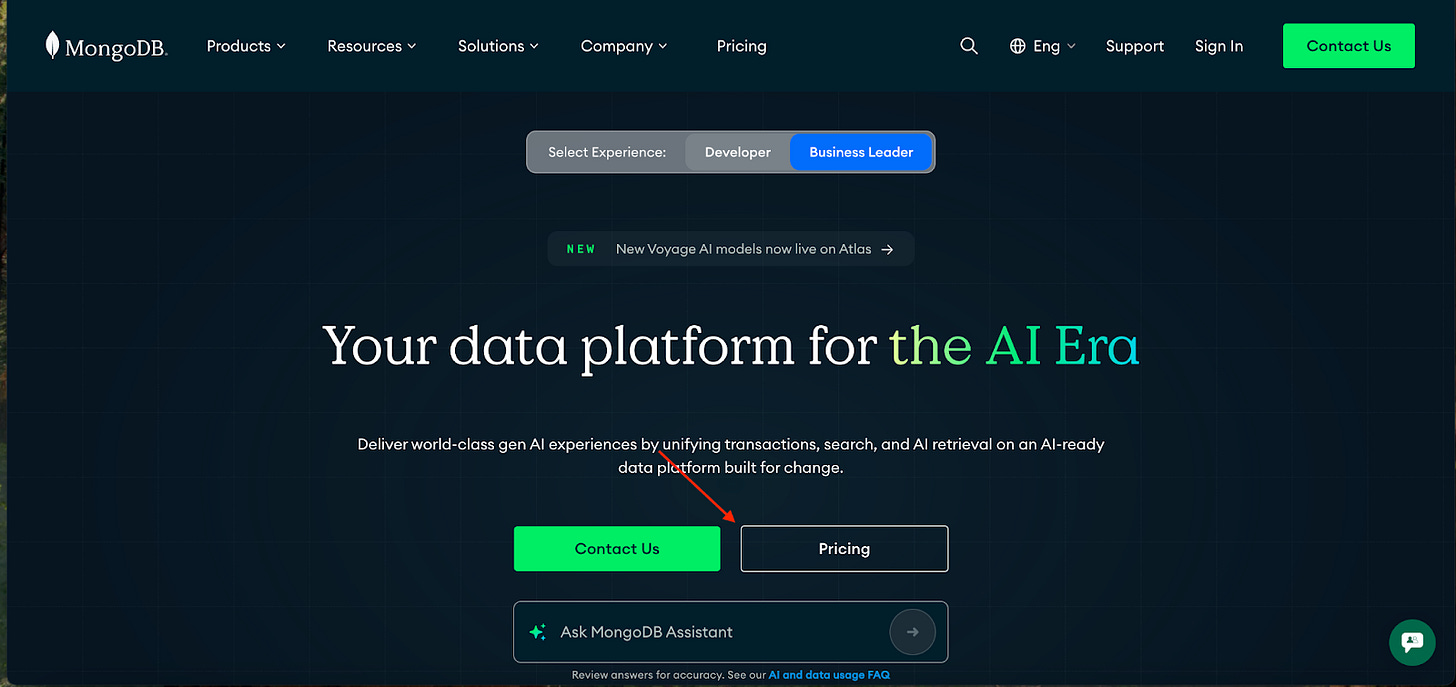

In the past few months, we have seen many top SaaS/fintech brands (like MongoDB and Sage) test into personalization options at the top of their homepages.

MongoDB has an “experience” selector at the top that’s tied to its two main ICPs: Developers and Business Leaders.

When you choose one, it completely changes the sections, the copy, and even the CTAs.

For example: in the hero, if you’re a developer, you can jump straight into the documentation. But for business leaders, that hero CTA becomes Pricing instead.
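As a rough sketch of the pattern (not MongoDB's actual implementation), a persona selector can be thought of as a lookup from the chosen persona to the hero content. The persona keys, headlines, CTA labels, and paths below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class HeroConfig:
    """Hypothetical hero-section content for one persona."""
    headline: str
    cta_label: str
    cta_path: str


# Assumption: two personas, mirroring the developer vs. business leader split.
HERO_BY_PERSONA: Dict[str, HeroConfig] = {
    "developer": HeroConfig(
        headline="Start building in minutes",
        cta_label="Read the docs",
        cta_path="/docs",
    ),
    "business_leader": HeroConfig(
        headline="See what it costs to scale",
        cta_label="View pricing",
        cta_path="/pricing",
    ),
}

# Shown when the visitor has not picked a persona yet.
DEFAULT_HERO = HeroConfig(
    headline="One platform for your whole team",
    cta_label="Get started",
    cta_path="/signup",
)


def hero_for(persona: Optional[str]) -> HeroConfig:
    """Return the hero content for the selected persona, or the default."""
    return HERO_BY_PERSONA.get(persona or "", DEFAULT_HERO)
```

Keeping a default means visitors who ignore the selector still see a complete page, and each persona entry can later grow to cover sections and copy further down the page.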

Sage, a popular fintech company, has a similar implementation, but it customizes the page based on industry/size rather than role.

Either way, this is the future. Provide customized and relevant information without intrusive pop-ups or making folks jump through multi-step forms.

Most websites bury the “who this is for” affinity, or the “how it works for XYZ persona” section way down the page (I’m guilty of this myself).

What does the DoWhatWorks data say? 💡 Looking at hundreds of tests around personalization and persona-focused positioning, I consistently see these variants win (over outcome-focused copy and many other approaches).

Especially for enterprise brands selling to complex buying committees with very different needs (developers, legal, marketing, CFO, etc.), this makes the experience far more relevant for the prospect.

Imagine the gap between what a developer wants to see and what a VP of marketing/sales/finance would want to see. Night and day.

3) The bar for what builds trust with social proof just went up.

Going through our test database at DoWhatWorks, I noticed something interesting.

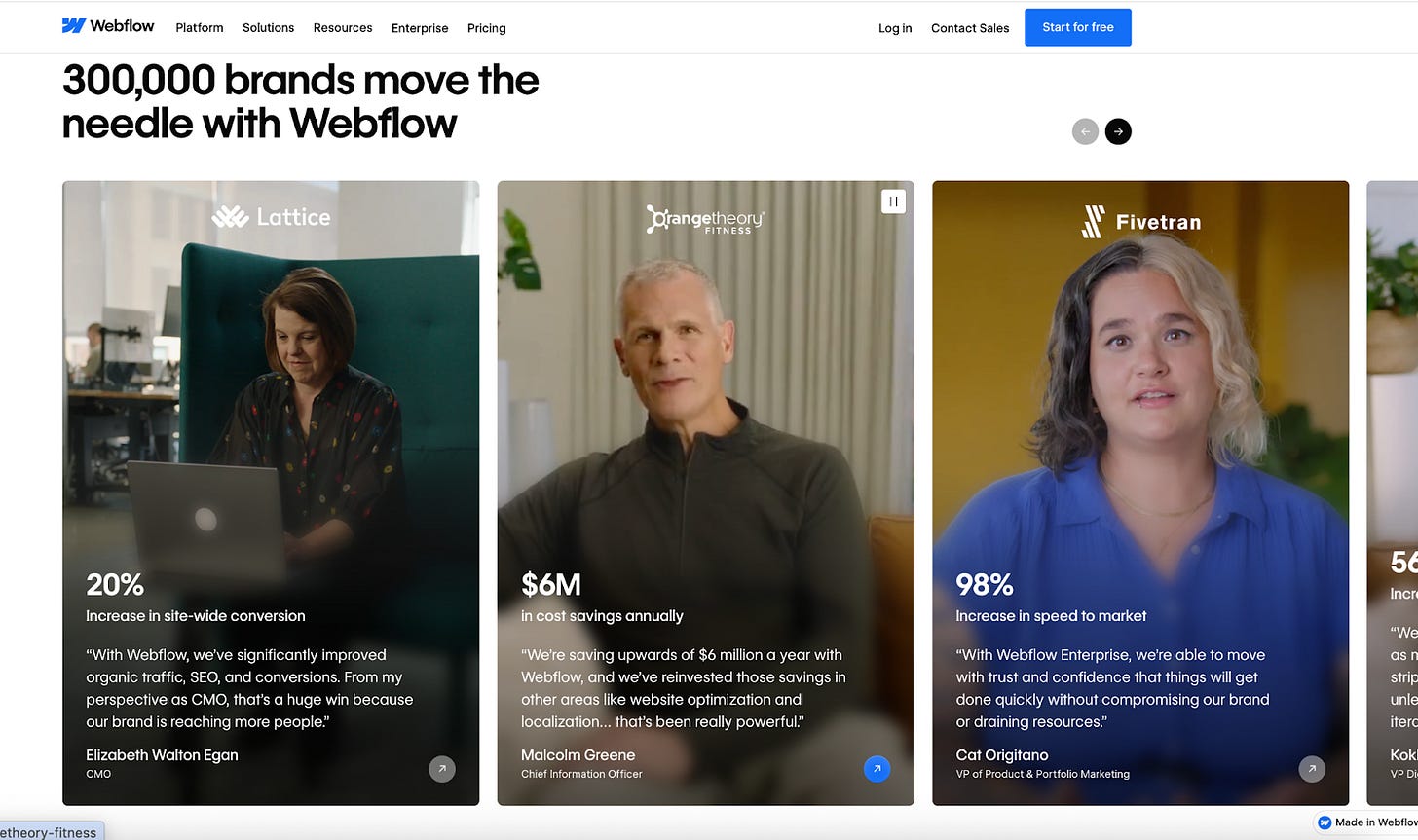

Why are logo bars + quote testimonials losing, but case studies + video testimonials are winning on websites?

I recently sat down with Ethan Decker, biologist turned marketer, who talked through the science behind some findings around social proof.

He explained that “cost signaling” might be at play here.

The idea comes from nature, where an animal like an elk spends a lot of energy and resources to build impressive antlers. It’s a signal that can’t be easily copied by a less genetically fit elk.

In B2B, we often see that plain logo bars, star ratings, and quote testimonials lose in A/B testing.

One reason might be that these signals are easy to fake. Anyone can put down any logo on their site, or pull a quote from an imaginary source (or a past customer from 7 years ago).

Meanwhile, if you have a video testimonial of an executive at Orangetheory (like Webflow does) talking about your product and the benefits, that gives you a lot more credibility. Ramp does the same thing.

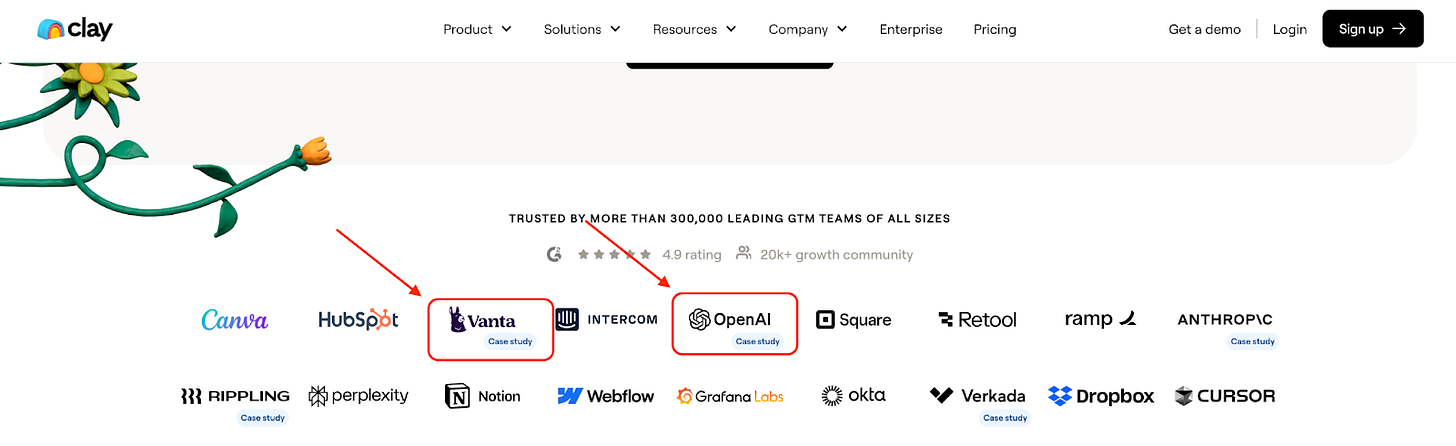

Or take Clay, which has logos from OpenAI and Google linked to case studies. Now the logos can be validated.

The bottom line: the easier it is to fake, the less credibility it carries.

How to apply this (even if you aren’t Adobe)

You might be thinking: “I don’t have millions of visitors. Can I still test this?”

1. Consistency > Volume

You don’t need massive traffic to get signals. You need consistency. If you have 1,000 visitors a month coming from a consistent source (e.g., SEO), you can run valid tests. If you have 10,000 visitors but they come from random viral spikes one month and paid ads the next, your data will be noisy. Focus on your stable channels.

2. Don’t forget mobile

B2B buyers are on their phones, too. When we analyze tests at DoWhatWorks, we look at both desktop and mobile versions. The good news? The patterns are typically mirrored (though not always), and for every trend shared in this article, the mobile versions were tested and produced the exact same results. So yes, these specific strategies work on the small screen too.
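One way to sanity-check the "Consistency > Volume" point before testing is to look at your traffic mix month over month. The sketch below is an assumption-laden illustration: the 60% threshold and the source names are hypothetical, and it only checks that the same source dominates each month. It does not replace a proper statistical power check.

```python
from typing import Dict, List, Tuple

# Assumption: a source "dominates" a month if it drives at least 60% of visits.
MIN_TOP_SHARE = 0.6


def top_source(month: Dict[str, int]) -> Tuple[str, float]:
    """Return the largest traffic source for a month and its share of visits."""
    total = sum(month.values())
    if total == 0:
        raise ValueError("month has no visits")
    name = max(month, key=lambda source: month[source])
    return name, month[name] / total


def is_consistent(months: List[Dict[str, int]],
                  min_share: float = MIN_TOP_SHARE) -> bool:
    """True if the same source dominates every month in the window."""
    tops = [top_source(m) for m in months]
    same_source = len({name for name, _ in tops}) == 1
    return same_source and all(share >= min_share for _, share in tops)


# Hypothetical monthly visit counts by source.
steady = [{"seo": 800, "paid": 200}, {"seo": 750, "paid": 250}]
spiky = [{"viral": 9000, "seo": 1000}, {"paid": 8000, "seo": 2000}]
```

Here `is_consistent(steady)` is true because SEO leads both months, while `is_consistent(spiky)` is false because the dominant source flips from a viral spike to paid ads, even though the volume is ten times higher.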

✅ Need ready-to-use GTM assets and AI prompts? Get the 100-Step GTM Checklist with proven website templates, sales decks, landing pages, outbound sequences, LinkedIn post frameworks, email sequences, and 20+ workshops you can immediately run with your team.

📘 New to GTM? Learn fundamentals. Get my best-selling GTM Strategist book that helped 9,500+ companies to go to market with confidence - frameworks and online course included.

📈 My latest course: AI-Powered LinkedIn Growth System teaches the exact system I use to generate 7M+ impressions a year and 70% of my B2B pipeline.

🏅 Are you in charge of GTM and responsible for leading others? Grab the GTM Masterclass (6 hours of training, end-to-end GTM explained on examples, guided workshops) to get your team up and running in no time.

🤝 Want to work together? ⏩ Check out the options and let me know how we can join forces.
